Using discrete probability distributions

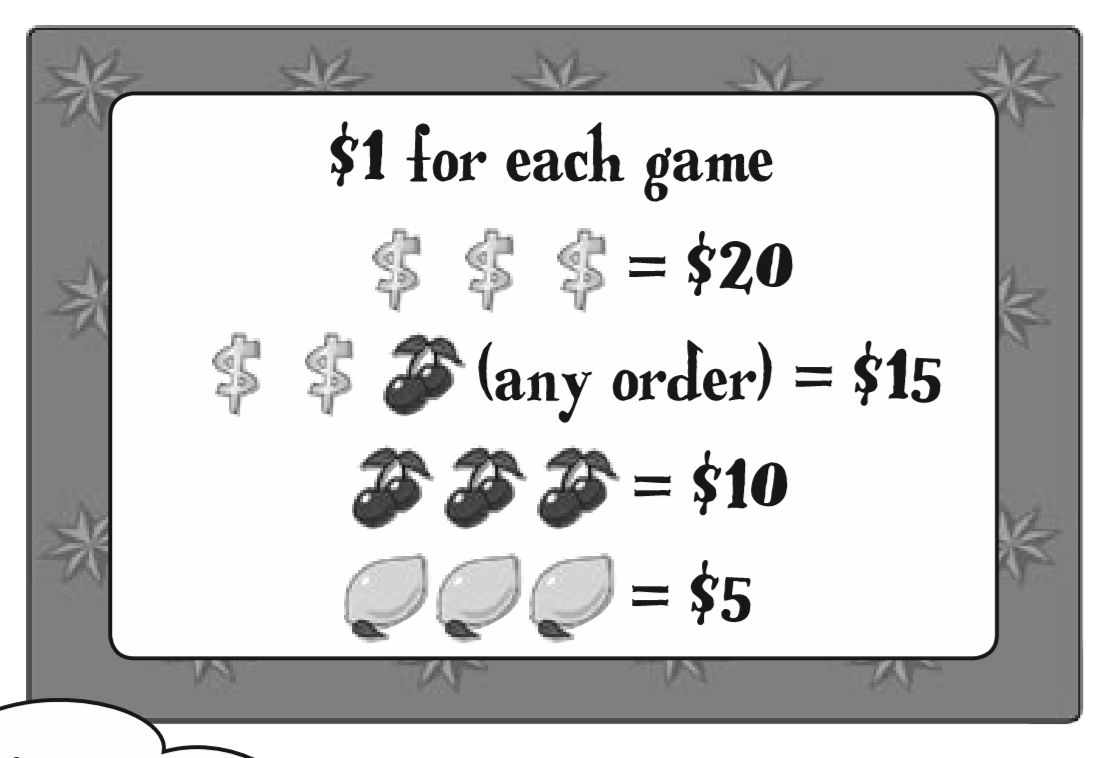

| Dollar(D) | Cherry(C) | Lemon(L) | Other(O) |

| 0.1 | 0.2 | 0.2 | 0.5 |

- Probability of DDD

- Probability of DDC (any order)

- Probability of L

- Probability of C

- Probability of losing

- P(D,D,D) = P(D) * P(D) * P(D)

- 0.1 x 0.1 x 0.1 = 0.001 = 1/1000

- P(D,D,C) + P(D,C,D) + P(C,D,D)

- (0.1 * 0.1 * 0.2) + (0.1 * 0.2 * 0.1) + (0.2 * 0.1 * 0.1) = 6/1000

- P(L,L,L) = P(L) * P(L) * P(L)

- .2 * .2 * .2 = .008 = 8/1000

- P(C,C,C) = P(C) * P(C) * P(C)

- .2 * .2 * .2 = .008 = 8/1000

- Probability of winning nothing (losing)

- 1 - (1/1000 + 6/1000 + 8/1000 + 8/1000) = 1- 23/1000 = 977/1000
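The hand calculations above can be reproduced in R. This is only a minimal sketch: the variable names (`p`, `p.ddd`, and so on) are made up for illustration, and it assumes the three windows are independent, as the multiplication above already does.

```r
# Probability of each symbol in a single window (from the table above)
p <- c(D = 0.1, C = 0.2, L = 0.2, O = 0.5)

# Three independent windows: multiply the per-window probabilities
p.ddd <- p[["D"]]^3                  # dollar, dollar, dollar
p.ddc <- 3 * p[["D"]]^2 * p[["C"]]   # two dollars and one cherry, 3 possible orders
p.lll <- p[["L"]]^3                  # three lemons
p.ccc <- p[["C"]]^3                  # three cherries

# Every other outcome wins nothing
p.none <- 1 - (p.ddd + p.ddc + p.lll + p.ccc)

c(DDD = p.ddd, DDC = p.ddc, LLL = p.lll, CCC = p.ccc, None = p.none)
```

The five values add up to 1, which is a quick check that no outcome was counted twice.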

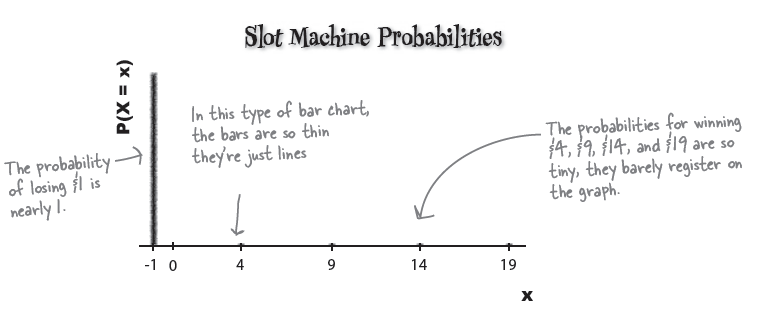

Prob. distribution

| Combination | None | Lemons | Cherries | Dollars/cherry | Dollars |

| Probability | 0.977 977/1000 | 0.008 8/1000 | 0.008 8/1000 | 0.006 6/1000 | 0.001 1/1000 |

| Combination | None | Lemons | Cherries | Dollars/cherry | Dollars |

| Gain | -1 dollar | 4 dollar | 9 dollar | 14 dollar | 19 dollar |

| Probability | 0.977 | 0.008 | 0.008 | 0.006 | 0.001 |

| Combination | None | Lemons | Cherries | Dollars/cherry | Dollars |

| x | -1 | 4 | 9 | 14 | 19 |

| P(X=x) | 0.977 | 0.008 | 0.008 | 0.006 | 0.001 |

Expectation gives you . . .

| Combination | None | Lemons | Cherries | Dollars/cherry | Dollars |

| k | -1 | 4 | 9 | 14 | 19 |

| P(X=x) | 0.977 | 0.008 | 0.008 | 0.006 | 0.001 |

| k * P(X=x) | -1*0.977 | 4*0.008 | 9*0.008 | 14*0.006 | 19*0.001 |

$$E(X) = -0.77$$

k <- c(-1,4,9,14,19)
Px <- c(.977,.008,.008,.006,.001)
k*Px
sum(k*Px)
exp <- sum(k*Px)
exp

> k <- c(-1,4,9,14,19)
> Px <- c(.977,.008,.008,.006,.001)
> k*Px
[1] -0.977 0.032 0.072 0.084 0.019
> sum(k*Px)
[1] -0.77
> exp <- sum(k*Px)
> exp
[1] -0.77

Variances and probability distributions

$$Var(X) = E(X-\mu)^2 = \sum{(x-\mu)^2} \cdot P(X=x) $$

$$\mu = -.77 $$

| k | -1 | 4 | 9 | 14 | 19 |

| mu | -.77 | -.77 | -.77 | -.77 | -.77 |

| k-mu | -.23 | 4.77 | 9.77 | 14.77 | 19.77 |

| (k-mu)(k-mu) | 0.0529 | 22.7529 | 95.4529 | 218.1529 | 390.8529 |

| p(X=x) | .977 | .008 | .008 | .006 | .001 |

| (k-mu)^2 * p(X=x) | 0.0516833 | 0.1820232 | 0.7636232 | 1.3089174 | 0.3908529 |

| sum | 2.6971 | | | | |

a <- c(-1,4,9,14,19)
b <- c(.977,.008,.008,.006,.001)
a*b
sum(a*b)
mu <- sum(a*b)
a-mu
(a-mu)^2
((a-mu)^2)*b
sum(((a-mu)^2)*b)
varx <- sum(((a-mu)^2)*b)
varx

> a <- c(-1,4,9,14,19)
> b <- c(.977,.008,.008,.006,.001)
> a*b
[1] -0.977 0.032 0.072 0.084 0.019
> sum(a*b)
[1] -0.77
> mu <- sum(a*b)
> a-mu
[1] -0.23 4.77 9.77 14.77 19.77
> (a-mu)^2
[1] 0.0529 22.7529 95.4529 218.1529 390.8529
> ((a-mu)^2)*b
[1] 0.0516833 0.1820232 0.7636232 1.3089174 0.3908529
> sum(((a-mu)^2)*b)
[1] 2.6971
> varx <- sum(((a-mu)^2)*b)
> varx
[1] 2.6971

\begin{eqnarray*} Var(X) & = & \sum{(x-\mu)^2} \cdot P(X=x) \\ & = & (-1 + 0.77)^2 \times 0.977 + (4 + 0.77)^2 \times 0.008 + (9 + 0.77)^2 \times 0.008 + (14 + 0.77)^2 \times 0.006 + (19 + 0.77)^2 \times 0.001 \\ & = & (-0.23)^2 \times 0.977 + 4.77^2 \times 0.008 + 9.77^2 \times 0.008 + 14.77^2 \times 0.006 + 19.77^2 \times 0.001 \\ & = & 0.0516833 + 0.1820232 + 0.7636232 + 1.3089174 + 0.3908529 \\ & = & 2.6971 \end{eqnarray*}

Standard deviation

\begin{eqnarray*} \sigma & = & \sqrt{Var(X)} \\ & = & \sqrt{2.6971} \\ & \approx & 1.642 \end{eqnarray*}

> sqrt(varx)
[1] 1.642285

e.g.

Here’s the probability distribution of a random variable X:

| x | 1 | 2 | 3 | 4 | 5 |

| P(X = x) | 0.1 | 0.25 | 0.35 | 0.2 | 0.1 |

$E(X)$ ?

$Var(X) $ ?

## E(X)
d <- c(1,2,3,4,5)
e <- c(.1,.25,.35,.2,.1)
de <- d*e
de
sum(de)

## Var(X)
de.mu <- sum(de)
de1 <- (d-de.mu)^2
de1
de2 <- de1*e
de2
de.var <- sum(de2)
de.var

> d <- c(1,2,3,4,5)
> e <- c(.1,.25,.35,.2,.1)
> de <- d*e
> de
[1] 0.10 0.50 1.05 0.80 0.50
> sum(de)
[1] 2.95
>
> de.mu <- sum(de)
> de1 <- (d-de.mu)^2
> de1
[1] 3.8025 0.9025 0.0025 1.1025 4.2025
>
> de2 <- de1*e
> de2
[1] 0.380250 0.225625 0.000875 0.220500 0.420250
>
> de.var <- sum(de2)
> de.var
[1] 1.2475

Fat Dan changed his prices

Another probability distribution.

| Combination | None | Lemons | Cherries | Dollars/cherry | Dollars |

| y | -2 | 23 | 48 | 73 | 98 |

| P(Y = y) | 0.977 | 0.008 | 0.008 | 0.006 | 0.001 |

Original one

| Combination | None | Lemons | Cherries | Dollars/cherry | Dollars |

| k | -1 | 4 | 9 | 14 | 19 |

| P(X=x) | 0.977 | 0.008 | 0.008 | 0.006 | 0.001 |

k <- c(-2, 23, 48, 73, 98)
j <- c(0.977,0.008,0.008,0.006,0.001)
k*j
sum(k*j)
kj.mu <- sum(k*j)
kj.mu

> k <- c(-2, 23, 48, 73, 98)
> j <- c(0.977,0.008,0.008,0.006,0.001)
> k*j
[1] -1.954 0.184 0.384 0.438 0.098
> sum(k*j)
[1] -0.85
> kj.mu <- sum(k*j)
> kj.mu
[1] -0.85

The expectation is slightly lower, so in the long term we can expect to lose $0.85 each game. The variance is much larger. This means that we stand to lose more money in the long term on this machine, but there's less certainty.

Compute the variance of the new machine:

kj.mu <- sum(k*j)
kj.var <- sum((k-kj.mu)^2*j)
kj.var

> kj.mu <- sum(k*j)
> kj.var <- sum((k-kj.mu)^2*j)
> kj.var
[1] 67.4275

Q: So expectation is a lot like the mean. Is there anything for probability distributions that's like the median or mode?

A: You can work out the most likely value, which would be a bit like the mode, but you won't normally have to do this. When it comes to probability distributions, the measure that statisticians are most interested in is the expectation.

Q: Shouldn't the expectation be one of the values that X can take?

A: It doesn't have to be. Just as the mean of a set of values isn't necessarily the same as one of the values, the expectation of a probability distribution isn't necessarily one of the values X can take.

Q: Are the variance and standard deviation the same as we had before when we were dealing with values?

A: They're the same, except that this time we're dealing with probability distributions. The variance and standard deviation of a set of values are ways of measuring how far values are spread out from the mean. The variance and standard deviation of a probability distribution measure how the probabilities of particular values are dispersed.

Q: I find the concept of $E(X - \mu)^2$ confusing. Is it the same as finding $E(X - \mu)$ and then squaring the end result?

A: No, these are two different calculations. $E(X - \mu)^2$ means that you find the square of $X - \mu$ for each value of X, and then find the expectation of all the results. If you calculate $E(X - \mu)$ and then square the result, you'll get a completely different answer. Technically speaking, you're working out $E((X - \mu)^2)$, but it's not often written that way.

Q: So what's the difference between a slot machine with a low variance and one with a high variance?

A: A slot machine with a high variance means that there's a lot more variability in your overall winnings. The amount you could win overall is less predictable. In general, the smaller the variance is, the closer your average winnings per game are likely to be to the expectation. If you play on a slot machine with a larger variance, your overall winnings will be less reliable.
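The last answer can be illustrated with a small simulation. This is only a sketch: the seed, the number of sessions, the number of games per session, and the helper name `session.mean` are arbitrary choices for illustration, not values from the text. It uses the original gains and the gains after the price change, with the same probabilities.

```r
set.seed(42)  # arbitrary seed, only for reproducibility

p <- c(0.977, 0.008, 0.008, 0.006, 0.001)
x <- c(-1, 4, 9, 14, 19)     # original machine (variance 2.6971)
y <- c(-2, 23, 48, 73, 98)   # machine after the price change (variance 67.4275)

# Average gain per game over one session of n.games plays (hypothetical helper)
session.mean <- function(gains, n.games) {
  mean(sample(gains, size = n.games, replace = TRUE, prob = p))
}

n.sessions <- 1000  # assumed number of simulated sessions
n.games <- 100      # assumed number of games per session
mx <- replicate(n.sessions, session.mean(x, n.games))
my <- replicate(n.sessions, session.mean(y, n.games))

# Spread of the per-session averages around the expectation
c(sd.x = sd(mx), sd.y = sd(my))
```

The per-session averages of the high-variance machine should be scattered much more widely, which is what "less reliable winnings" means here.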

Pool puzzle

\begin{eqnarray*} \text {original win} & = & \text {c(0, 5, 10, 15, 20)} \\ \text {original cost} & = & \text {c(1)} \\ \text {X} & = & \text {the amount of money you receive} \\ \end{eqnarray*}

\begin{eqnarray*} X & = & (\text{original win}) - (\text{original cost}) \\ & = & (\text{original win}) - 1 \\ (\text{original win}) & = & X + 1 \\ \\ Y & = & 5 * (\text{original win}) - (\text{new cost}) \\ & = & 5 * (\text{X + 1}) - 2 \\ & = & 5 * X + 5 - 2 \\ & = & 5 * X + 3 \\ \end{eqnarray*}

E(X) = -.77 and E(Y) = -.85. What is 5 * E(X) + 3?

\begin{eqnarray*} E(X) & = & -.77 \\ E(Y) & = & E(5X+3) \;\;\;\;\; \because Y=5X+3 \\ & = & E(5X) + 3 \\ & = & 5 E(X) + 3 \\ & = & -0.85 \\ \\ \\ Var(Y) & = & Var(5X+3) \\ & = & Var(5X) + Var(3) \\ & = & Var(5X) \\ & = & 5^2 Var(X) \\ & = & 67.4275 \end{eqnarray*}
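As a numerical check of the derivation above, the following sketch computes E(Y) and Var(Y) both directly from the distribution of Y and through the shortcuts 5E(X) + 3 and 5^2 Var(X). The helper names `ev` and `vr` are made up for this example.

```r
x <- c(-1, 4, 9, 14, 19)
p <- c(0.977, 0.008, 0.008, 0.006, 0.001)
y <- 5 * x + 3   # gains on the new machine: -2, 23, 48, 73, 98

# Hypothetical helpers: expectation and variance of a discrete distribution
ev <- function(v, p) sum(v * p)
vr <- function(v, p) sum((v - ev(v, p))^2 * p)

# Direct calculation versus the linear-transform shortcut
c(direct = ev(y, p), shortcut = 5 * ev(x, p) + 3)
c(direct = vr(y, p), shortcut = 5^2 * vr(x, p))
```

Both pairs should agree: the constant 3 shifts the expectation but has no effect on the variance.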

\begin{eqnarray*} E(aX + b) & = & a \cdot E(X) + b \\ Var(aX + b) & = & a^{2} \cdot Var(X) \\ E(X + Y) & = & E(X) + E(Y) \\ Var(X + Y) & = & Var(X) + Var(Y) \\ \end{eqnarray*}

\begin{eqnarray*} E(X_1 + X_2 + \ldots + X_n) & = & nE(X) \\ Var(X_1 + X_2 + \ldots + X_n) & = & nVar(X) \\ \end{eqnarray*}
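For n = 2 the rule for independent observations can be checked by listing every pair of outcomes from two games. This sketch assumes the two games are independent, so each joint probability is the product of the two individual probabilities; the variable names are illustrative.

```r
x <- c(-1, 4, 9, 14, 19)
p <- c(0.977, 0.008, 0.008, 0.006, 0.001)

# All pairs (game 1, game 2): total gain and its joint probability
total <- as.vector(outer(x, x, "+"))
p.total <- as.vector(outer(p, p, "*"))

e.x <- sum(x * p)
v.x <- sum((x - e.x)^2 * p)
e.total <- sum(total * p.total)
v.total <- sum((total - e.total)^2 * p.total)

c(e.total = e.total, two.e.x = 2 * e.x)
c(v.total = v.total, two.v.x = 2 * v.x)
```

Note that this is two separate games, not one game counted twice; the second case would be 2X, with variance 4Var(X).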

\begin{eqnarray*} E(X + Y) & = & E(X) + E(Y) \\ Var(X + Y) & = & Var(X) + Var(Y) \\ E(X - Y) & = & E(X) - E(Y) \\ Var(X - Y) & = & Var(X) + Var(Y) \\ E(aX + bY) & = & aE(X) + bE(Y) \\ Var(aX + bY) & = & a^{2}Var(X) + b^{2}Var(Y) \\ E(aX - bY) & = & aE(X) - bE(Y) \\ Var(aX - bY) & = & a^{2}Var(X) + b^{2}Var(Y) \\ \end{eqnarray*}

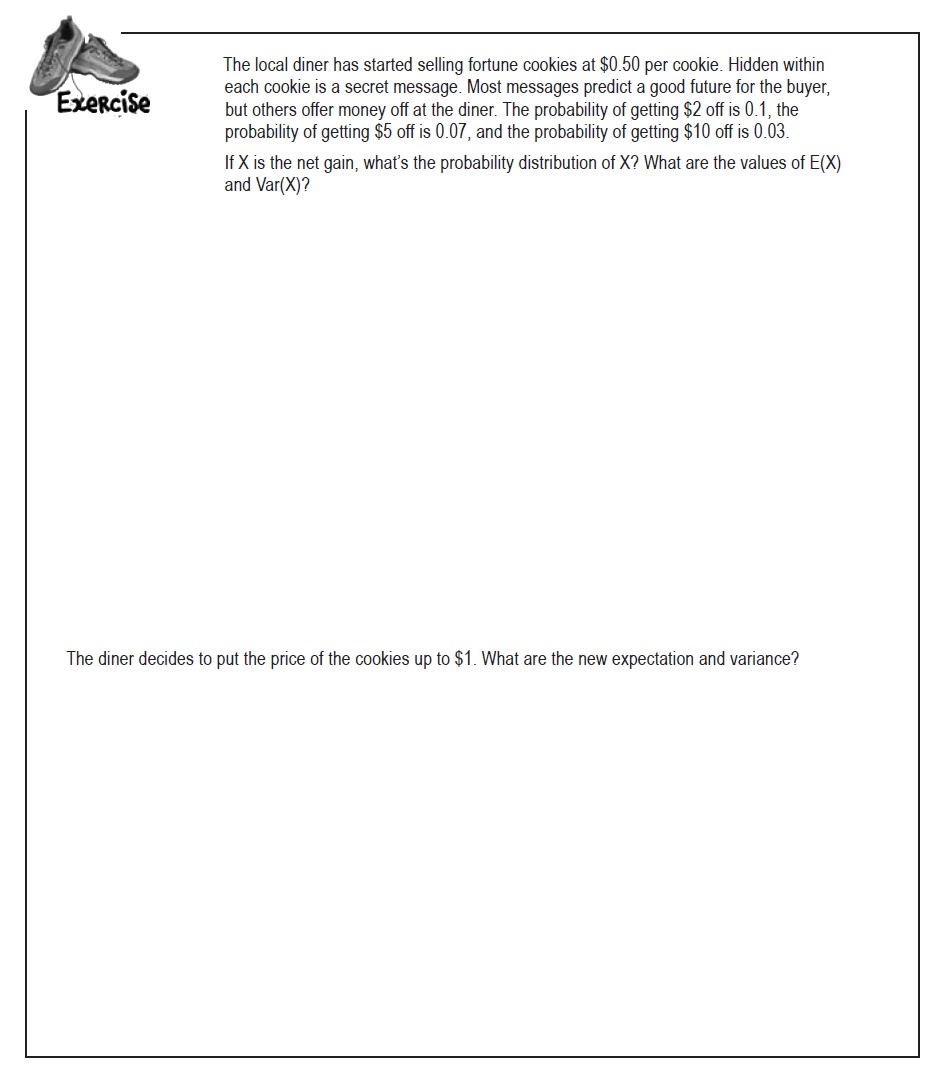

v.fc <- c(-0.5, 1.5, 4.5, 9.5)
p.fc <- c(0.8, 0.1, 0.07, 0.03)
exp.fc <- sum(v.fc*p.fc)
var.fc <- sum((v.fc-exp.fc)^2*p.fc)
exp.fc
var.fc

v.fc2 <- c(-1, 1, 4, 9)
p.fc <- c(0.8, 0.1, 0.07, 0.03)
exp.fc2 <- sum(v.fc2*p.fc)
var.fc2 <- sum((v.fc2-exp.fc2)^2*p.fc)
exp.fc2
var.fc2

> v.fc <- c(-0.5, 1.5, 4.5, 9.5)
> p.fc <- c(0.8, 0.1, 0.07, 0.03)
>
> exp.fc <- sum(v.fc*p.fc)
> var.fc <- sum((v.fc-exp.fc)^2*p.fc)
> exp.fc
[1] 0.35
> var.fc
[1] 4.4275
>
> v.fc2 <- c(-1, 1, 4, 9)
> p.fc <- c(0.8, 0.1, 0.07, 0.03)
>
> exp.fc2 <- sum(v.fc2*p.fc)
> var.fc2 <- sum((v.fc2-exp.fc2)^2*p.fc)
> exp.fc2
[1] -0.15
> var.fc2
[1] 4.4275

The code above computes each distribution separately. The calculation below gets the same result by applying Y = X - 0.5.

\begin{eqnarray*}

E(X-a) & = & E(X) - a \\

E(X- 0.5) & = & E(X) - 0.5 \\

& = & 0.35 - 0.5 \\

& = & -0.15

\end{eqnarray*}

\begin{eqnarray*}

V(X-a) & = & V(X) \\

V(X- 0.5) & = & V(X) \\

& = & 4.4275

\end{eqnarray*}

A restaurant offers two menus, one for weekdays and the other for weekends. Each menu offers four set prices, and the probability distributions for the amount someone pays is as follows:

| Weekday: | ||||

| x | 10 | 15 | 20 | 25 |

| P(X = x) | 0.2 | 0.5 | 0.2 | 0.1 |

| Weekend: | ||||

| y | 15 | 20 | 25 | 30 |

| P(Y = y) | 0.15 | 0.6 | 0.2 | 0.05 |

Who would you expect to pay the restaurant most: a group of 20 eating at the weekend, or a group of 25 eating on a weekday?

x1 <- c(10,15,20,25)
x1p <- c(.2,.5,.2,.1)
x2 <- c(15,20,25,30)
x2p <- c(.15,.6,.2,.05)
x1n <- 25
x2n <- 20
x1mu <- sum(x1*x1p)
x2mu <- sum(x2*x2p)
x1e <- x1mu*x1n
x2e <- x2mu*x2n
x1e
x2e

> x1e
[1] 400
> x2e
[1] 415

The group of 20 eating at the weekend is expected to pay more (x2e = 415) than the group of 25 eating on a weekday (x1e = 400).

e.g.

Sam likes to eat out at two restaurants. Restaurant A is generally more expensive than restaurant B, but the food quality is generally much better.

Below you’ll find two probability distributions detailing how much Sam tends to spend at each restaurant. As a general rule, what would you say is the difference in price between the two restaurants? What’s the variance of this?

| Restaurant A: | ||||

| x | 20 | 30 | 40 | 45 |

| P(X = x) | 0.3 | 0.4 | 0.2 | 0.1 |

| Restaurant B: | |||

| y | 10 | 15 | 18 |

| P(Y = y) | 0.2 | 0.6 | 0.2 |

x3 <- c(20,30,40,45)
x3p <- c(.3,.4,.2,.1)
x4 <- c(10,15,18)
x4p <- c(.2,.6,.2)
x3e <- sum(x3*x3p)
x4e <- sum(x4*x4p)
x3e
x4e
## difference in price between the two
x3e-x4e
x3var <- sum(((x3-x3e)^2)*x3p)
x4var <- sum(((x4-x4e)^2)*x4p)
x3var
x4var
## variance of the price difference:
## Var(X - Y) = Var(X) + Var(Y) for independent X and Y
x3var+x4var

> x3 <- c(20,30,40,45)
> x3p <- c(.3,.4,.2,.1)
> x4 <- c(10,15,18)
> x4p <- c(.2,.6,.2)
>
> x3e <- sum(x3*x3p)
> x4e <- sum(x4*x4p)
>
> x3e
[1] 30.5
> x4e
[1] 14.6
> ## difference in price between the two
> x3e-x4e
[1] 15.9
>
> x3var <- sum(((x3-x3e)^2)*x3p)
> x4var <- sum(((x4-x4e)^2)*x4p)
>
> x3var
[1] 72.25
> x4var
[1] 6.64
> ## variance of the price difference:
> ## Var(X - Y) = Var(X) + Var(Y) for independent X and Y
> x3var+x4var
[1] 78.89
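The value 78.89 can be cross-checked by building the distribution of the difference X - Y explicitly. This is a sketch that assumes Sam's spending at the two restaurants is independent, so each joint probability is a product; the names `diff.val`, `diff.p`, `diff.e`, and `diff.var` are made up for the example.

```r
x3 <- c(20, 30, 40, 45)
x3p <- c(.3, .4, .2, .1)
x4 <- c(10, 15, 18)
x4p <- c(.2, .6, .2)

# Every combination of a price at A and a price at B
diff.val <- as.vector(outer(x3, x4, "-"))   # x - y for each pair
diff.p <- as.vector(outer(x3p, x4p, "*"))   # joint probability under independence

diff.e <- sum(diff.val * diff.p)
diff.var <- sum((diff.val - diff.e)^2 * diff.p)

diff.e
diff.var
```

The variances add even though the values are subtracted, because subtracting Y adds its spread rather than cancelling it.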

Theorems

| $E(X)$ | $\sum{x}\cdot P(X=x)$ |

| $E(X^2)$ | $\sum{x^{2}}\cdot P(X=x)$ |

| $E(aX + b)$ | $aE(X) + b$ |

| $E(f(X))$ | $\sum{f(x)} \cdot P(X=x)$ |

| $E(aX - bY)$ | $aE(X)-bE(Y)$ |

| $E(X_1 + X_2 + X_3)$ | $E(X) + E(X) + E(X) = 3E(X) \;\;\; $ 1) |

| $Var(X)$ | $E(X-\mu)^{2} = E(X^{2})-E(X)^{2} \;\;\; $ see $\ref{var.theorem.1} $ |

| $Var(c)$ | $0 \;\;\; $ see $\ref{var.theorem.41}$ |

| $Var(aX + b)$ | $a^{2}Var(X) \;\;\; $ see $\ref{var.theorem.2}$ and $\ref{var.theorem.3}$ |

| $Var(aX - bY)$ | $a^{2}Var(X) + b^{2}Var(Y)$ see 1 |

| $Var(X_1 + X_2 + X_3)$ | $Var(X) + Var(X) + Var(X) = 3 Var(X) \;\;\; $ 2) |

| $Var(X_1 + X_1 + X_1)$ | $Var(3X) = 3^2 Var(X) = 9 Var(X) $ |

\begin{eqnarray*} Var(aX - bY) & = & Var(aX + -bY) \\ & = & Var(aX) + Var(-bY) \\ & = & a^{2}Var(X) + b^{2}Var(Y) \end{eqnarray*}

see also why n-1

Variance Theorem 1

\begin{align} Var[X] & = {E{(X-\mu)^2}} \nonumber \\ & = E[(X^2 - 2 X \mu + \mu^2)] \nonumber \\ & = E[X^2] - 2 \mu E[X] + E[\mu^2] \nonumber \\ & = E[X^2] - 2 \mu E[X] + E[\mu^2], \; \text{because E[X]=} \mu \text{, and E[} \mu^2 \text{] = } \mu^2, \nonumber \\ & = E[X^2] - 2 \mu^2 + \mu^2 \nonumber \\ & = E[X^2] - \mu^2 \nonumber \\ & = E[X^2] - E[X]^2 \label{var.theorem.1} \tag{variance theorem 1} \\ \end{align}
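Variance theorem 1 can be checked against the slot machine distribution used earlier. The sketch below computes the variance once from the definition and once from the shortcut E[X^2] - E[X]^2; the names `var.def` and `var.short` are illustrative.

```r
x <- c(-1, 4, 9, 14, 19)
p <- c(0.977, 0.008, 0.008, 0.006, 0.001)

mu <- sum(x * p)                     # E[X]
var.def <- sum((x - mu)^2 * p)       # definition: E[(X - mu)^2]
var.short <- sum(x^2 * p) - mu^2     # shortcut:   E[X^2] - E[X]^2

c(definition = var.def, shortcut = var.short)
```

Both should come out as 2.6971, the value found earlier for this distribution.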

Theorem 2: Why square

According to $ \ref{var.theorem.1} $,

$$ Var[X] = E[X^2] - E[X]^2 $$

so

\begin{align*} Var[aX] & = & E[a^2X^2] − (E[aX])^2 \\ & = & a^2 E[X^2] - (a E[X])^2 \\ & = & a^2 E[X^2] - (a^2 E[X]^2) \\ & = & a^2 (E[X^2] - (E[X])^2) \\ & = & a^2 (Var[X]) \label{var.theorem.2} \tag{variance theorem 2} \\ \end{align*}

Theorem 3: Why Var[X+c] = Var[X]

\begin{align} Var[X + c] = Var[X] \nonumber \end{align}

According to $ \ref{var.theorem.1} $,

$$ Var[X] = E[X^2] - E[X]^2 $$

so

\begin{align} Var[X + c] = & E[(X+c)^2] - E[X+c]^2 \nonumber \\ = & E[(X^2 + 2cX + c^2)] \label{tmp.1} \tag{temp 1} \\ & − E(X + c)E(X + c) \label{tmp.2} \tag{temp 2} \\ \end{align}

From $ \ref{tmp.1} $,

\begin{align}

E (X^2 + 2cX + c^2) = E (X^2) + 2cE(X) + c^2 \\

\end{align}

and from $\ref{tmp.2}$,

\begin{align}

E(X + c)E(X + c) = & E(X)(E(X + c)) + E(c)(E(X + c)) \nonumber \\

= & E(X)^2 + cE(X) + cE(X) + c^2 \nonumber \\

= & E(X)^2 + 2cE(X) + c^2 \\

\end{align}

Putting the two together,

\begin{align}

Var(X + c) = & E(X^2) + 2cE(X) + c^2 − E(X)^2 − 2cE(X) − c^2 \nonumber \\

= & E(X^2) − E(X)^2 \nonumber \\

= & Var(X) \label{var.theorem.3} \tag{variance theorem 3} \\

\end{align}

Theorem 4: Var(c) = 0

\begin{align}

Var(X) = & 0; \;\;\;\; \text{if X = c, a constant } \label{var.theorem.41} \tag{variance theorem 4.1} \\

\text{otherwise } \nonumber \\

Var(X) \ge & 0 \label{var.theorem.42} \tag{variance theorem 4.2} \\

\end{align}

Variance is defined as below. If we let $X=c$ (where c is a constant), then

\begin{align}

Var(X) & = E[(X − E(X))^2] \text{ because X = c, and E(X) = c} \nonumber \\

& = E[(c-c)^2] \nonumber \\

& = 0 \nonumber \\

\text{if X } \ne \text{c, then} \nonumber \\

& \text{because } (X − E(X))^2 \ge 0 \nonumber \\

& Var(X) \ge 0 \nonumber

\end{align}

Theorem 5: Var(X+Y)

\begin{align} Var(X + Y) = Var(X) + Var(Y) + 2Cov(X, Y) \label{var.theorem.51} \tag{variance theorem 5-1} \\ Var(X − Y) = Var(X) + Var(Y) − 2Cov(X, Y) \label{var.theorem.52} \tag{variance theorem 5-2} \\ \end{align}

From $ \ref{var.theorem.1} $,

$$ Var[X] = E[X^2] - E[(X)]^2 $$

so substituting X+Y for X gives

\begin{align}

Var[X+Y] = & E[(X + Y)^2] \label{tmp.03} \tag{temp 3} \\

- & E[(X + Y)]^2 \label{tmp.04} \tag{temp 4}

\end{align}

$\ref{tmp.03}$ and $\ref{tmp.04}$ simplify as follows:

\begin{align*} & E[(X + Y)^2] = E[X^2 + 2XY + Y^2] = E[X^2] + 2E[XY] + E[Y^2] \\ - & [E(X + Y)]^2 = [E(X) + E(Y)]^2 = E(X)^2 + 2E(X)E(Y) + E(Y)^2 \\ \end{align*}

Taking the rightmost expression on each line,

\begin{align*} Var[(X+Y)] = & E[X^2] & + & 2E[XY] & + & E[Y^2] \\ - & E(X)^2 & - & 2E(X)E(Y) & - & E(Y)^2 \\ = & Var[X] & + & 2 E[XY]-2E(X)E(Y) & + & Var[Y] \\ \end{align*}

The middle part is twice the covariance, because

\begin{align}

E(XY)- E(X)E(Y) = Cov[X,Y] \label{cov} \tag{covariance} \\

\end{align}

Therefore

\begin{align*}

Var[(X+Y)] = Var[X] + 2 Cov[X,Y] + Var[Y] \\

\end{align*}
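Theorems 5-1 and 5-2 can be checked on a small joint distribution in which X and Y are not independent. The joint probabilities below are invented purely for illustration (they are not from the text), and all variable names are hypothetical.

```r
# Hypothetical joint distribution: rows are values of X, columns are values of Y
xv <- c(0, 1)
yv <- c(0, 1)
joint <- matrix(c(0.4, 0.1,
                  0.1, 0.4), nrow = 2, byrow = TRUE)

px <- rowSums(joint)   # marginal distribution of X
py <- colSums(joint)   # marginal distribution of Y

e.x <- sum(xv * px)
e.y <- sum(yv * py)
v.x <- sum((xv - e.x)^2 * px)
v.y <- sum((yv - e.y)^2 * py)

# Cov(X, Y) = E(XY) - E(X)E(Y)
cov.xy <- sum(outer(xv, yv, "*") * joint) - e.x * e.y

# Variance of X + Y and of X - Y computed directly from the joint distribution
s <- outer(xv, yv, "+")
d <- outer(xv, yv, "-")
v.sum <- sum((s - sum(s * joint))^2 * joint)
v.diff <- sum((d - sum(d * joint))^2 * joint)

c(direct = v.sum, theorem.5.1 = v.x + v.y + 2 * cov.xy)
c(direct = v.diff, theorem.5.2 = v.x + v.y - 2 * cov.xy)
```

Because the covariance here is positive (0.15), Var(X + Y) is larger than Var(X) + Var(Y) and Var(X - Y) is smaller.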

Questions

Which one is correct?

\begin{align} Var(X+X) & = Var(X) + Var(X) & = 2 * Var(X) \label{tmp.05} \tag{1} \\ Var(X+X) & = Var(2X) & = 2^2 * Var(X) \label{tmp.06} \tag{2} \end{align}

Looking again at $\ref{var.theorem.51}$,

\begin{align*}

Var(X+Y) = Var(X) + 2 Cov(X,Y) + Var(Y) \\

\end{align*}

If X and Y are independent events (groups), then

$ Cov(X,Y) = 0 $, so

\begin{align*}

Var[(X+Y)] = Var[X] + Var[Y] \\

\end{align*}

X1 and X2 usually denote two independent sets that share the same characteristics (statistics), so

\begin{align*}

Var(X1 + X2) = Var(X1) + Var(X2) \\

\end{align*}

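Here X1 and X2 are mutually independent sets with the same distribution (for example, each set has n=10000, mean=0, and var=4), and the variance of the set obtained by adding the two equals the sum of the individual variances. In contrast, the case below is the same set moved through a linear transformation (X to 2X).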

\begin{align} Var(X1 + X1) & = Var(2*X1) \nonumber \\ & = 2^2 Var(X1) \nonumber \\ & = 4 Var(X1) \nonumber \\ \end{align}

Therefore equation $(\ref{tmp.06})$ is the correct one.

This can also be seen as follows.

Substituting $X$ for $Y$ in $\ref{var.theorem.51}$ gives

\begin{align*}

Var(X + X) & = Var(X) + 2 Cov(X, X) + Var(X) \\

& \;\;\;\;\; \text{because } \\

& \;\;\;\;\; \text{according to the below } \ref{cov.xx}, \\

& \;\;\;\;\; Cov(X,X) = Var(X) \\

& = Var(X) + 2 Var(X) + Var(X) \\

& = 4 Var(X)

\end{align*}

\begin{align} Cov[X,Y] & = E(XY) - E(X)E(Y) \nonumber \\ Cov[X,X] & = E(XX) - E(X)E(X) \nonumber \\ & = E(X^2) - E(X)^2 \nonumber \\ & = V(X) \label{cov.xx} \tag{3} \end{align}

Examples in R

Checking this in R:

# variance theorem 5-1, 5-2

# http://commres.net/wiki/variance_theorem

# need a function, rnorm2

rnorm2 <- function(n,mean,sd) { mean+sd*scale(rnorm(n)) }

m <- 0

v <- 1

n <- 10000

set.seed(1)

x1 <- rnorm2(n, m, sqrt(v))

x2 <- rnorm2(n, m, sqrt(v))

x3 <- rnorm2(n, m, sqrt(v))

m.x1 <- mean(x1)

m.x2 <- mean(x2)

m.x3 <- mean(x3)

m.x1

m.x2

m.x3

v.x1 <- var(x1)

v.x2 <- var(x2)

v.x3 <- var(x3)

v.x1

v.x2

v.x3

v.12 <- var(x1 + x2)

v.12

######################################

## v.12 should be near var(x1)+var(x2)

######################################

## It is not exactly 2*v because x1 and x2 are,

## by chance, very slightly dependent

## (that is, slightly correlated)

## from theorem 5-1

## var(x1+x2) = var(x1)+var(x2)+ (2*cov(x1,x2))

cov.x1x2 <- cov(x1,x2)

var(x1 + x2)

var(x1) + var(x2) + (2*cov.x1x2)

# also check theorem 5-2

var(x1 - x2)

var(x1) + var(x2) - (2 * cov.x1x2)

# only when x1, x2 are independent (orthogonal)

# var(x1+x2) == var(x1) + var(x2)

########################################

## and for the same (not independent) set X1

v.11 <- var(x1 + x1)

# var(2*x1) = 2^2 var(X1)

v.11
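The last step above prints v.11 without comparing it to anything. A short self-contained check of the same idea might look like the following; it uses plain `rnorm()` rather than the `rnorm2()` helper, and the seed and the name `z` are arbitrary choices for this sketch.

```r
set.seed(1)          # arbitrary seed for reproducibility
z <- rnorm(10000)    # any numeric sample works here

# Adding a set to itself doubles every value, so the variance quadruples
all.equal(var(z + z), 4 * var(z))

# The covariance of a set with itself is its variance
all.equal(cov(z, z), var(z))

# Theorem 5-1 with Y replaced by X
all.equal(var(z + z), var(z) + var(z) + 2 * cov(z, z))
```

All three comparisons should return TRUE, which matches equation (2) in the Questions section rather than equation (1).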