
Geometric Binomial and Poisson Distributions

Summary

Geometric, binomial, and Poisson distributions

\begin{align*}

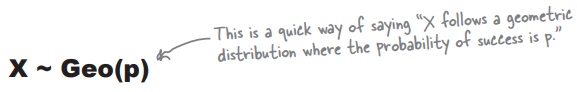

\text{Geometric Distribution: } \;\;\; \text{X} & \thicksim Geo(p) \\

p(X = k) & = q^{k-1} \cdot p \\

E\left[ X \right] & = \frac{1}{p} \\

V\left[ X \right] & = \frac{q}{p^2} \\

\\

\text{Binomial Distribution: } \;\;\; \text{X} & \thicksim B(n, p) \\

p(X = r) & = \binom{n}{r} \cdot p^{r} \cdot q^{n-r} \\

E\left[ X \right] & = {n}{p} \\

V\left[ X \right] & = {n}{p}{q} \\

\\

\text{Poisson Distribution: } \;\;\; \text{X} & \thicksim P( \lambda ) \\

P(X=r) & = e^{- \lambda} \cdot \dfrac{\lambda^{r}} {r!} \\

E\left[ X \right] & = \lambda \\

V\left[ X \right] & = \lambda \\

\end{align*}

Geometric Distributions

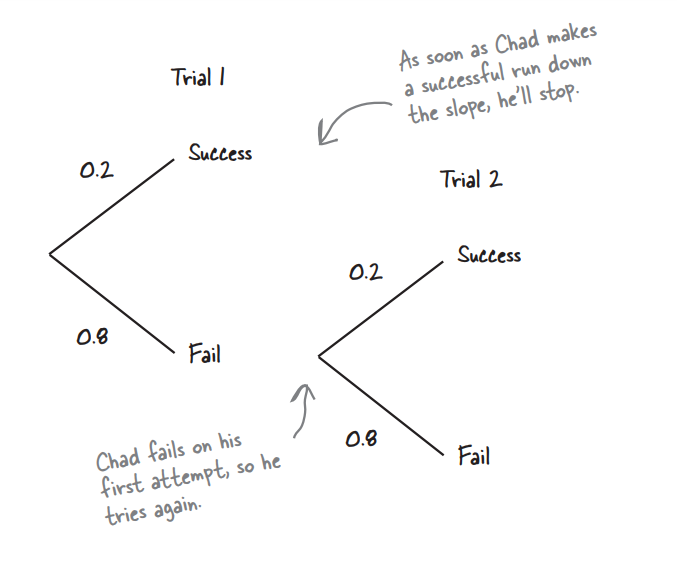

The probability of Chad making a clear run down the slope is 0.2, and he's going to keep on trying until he succeeds. After he’s made his first successful run down the slopes, he’s going to stop snowboarding, and head back to the lodge triumphantly.

It’s time to exercise your probability skills. The probability of Chad making a successful run down the slopes is 0.2 for any given trial (assume trials are independent). What’s the probability he’ll need two trials? What’s the probability he’ll make a successful run down the slope in one or two trials? Remember, when he’s had his first successful run, he’s going to stop.

Hint: You may want to draw a probability tree to help visualize the problem.

P(X = 1) = P(success in the first trial) = 0.2

P(X = 2) = P(failure in the first trial and success in the second trial) = 0.8 * 0.2 = 0.16

Probability of succeeding on the first or the second trial:

P(X <= 2) = P(X = 1) + P(X = 2) = 0.2 + 0.16 = 0.36
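The three probabilities above can be checked in R. This is a minimal sketch; the variable names (`p_x1`, `p_x2`, `p_le2`, and the `_builtin` ones) are made up for illustration. Note that `dgeom()` and `pgeom()` count the failures before the first success, so trial r corresponds to `x = r - 1`.

```r
# Chad's example: success probability p = 0.2 on every trial
p <- 0.2
q <- 1 - p

# P(X = 1): success on the first trial
p_x1 <- p
# P(X = 2): failure on the first trial AND success on the second
p_x2 <- q * p
# P(X <= 2): success within the first two trials
p_le2 <- p_x1 + p_x2

# Same values from the built-in functions (x = number of failures = r - 1)
p_x2_builtin <- dgeom(1, prob = p)
p_le2_builtin <- pgeom(1, prob = p)

print(c(p_x1, p_x2, p_le2))
print(c(p_x2_builtin, p_le2_builtin))
```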

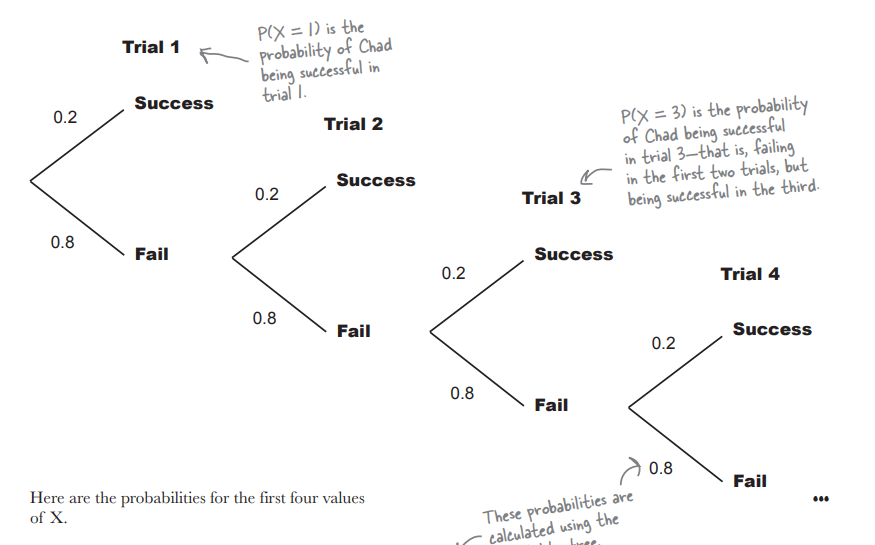

| X | P(X=x) |
|---|---|
| 1 | 0.2 |
| 2 | 0.8 * 0.2 = 0.16 |
| 3 | 0.8 * 0.8 * 0.2 = 0.128 |
| 4 | 0.8 * 0.8 * 0.8 * 0.2 = 0.1024 |
| . . . | . . . . . |

| X | P(X=x) | Power of 0.8 | Power of 0.2 |
|---|---|---|---|
| 1 | 0.8^0 * 0.2 | 0 | 1 |
| 2 | 0.8^1 * 0.2 | 1 | 1 |
| 3 | 0.8^2 * 0.2 | 2 | 1 |
| 4 | 0.8^3 * 0.2 | 3 | 1 |
| 5 | 0.8^4 * 0.2 | 4 | 1 |
| r | . . . . . | r - 1 | 1 |

$P(X = r) = 0.8^{r-1} \times 0.2$

$P(X = r) = q^{r-1} \times p$

This formula is called the geometric distribution.

- You run a series of independent trials. (Each trial is independent: its outcome does not depend on the earlier trials.)

- There can be either a success or failure for each trial, and the probability of success is the same for each trial. (Only success or failure can occur, and the success probability p stays constant across all trials.)

- The main thing you’re interested in is how many trials are needed in order to get the first successful outcome. (You stop at the first success; the distribution describes the number of trials needed to reach it.)

$ P(X=r) = {p \cdot q^{r-1}} $

$ P(X=r) = {p \cdot (1-p)^{r-1}} $

```r
p = 0.20
n = 29
## geometric . . . .
## note that it starts with 0 rather than 1
## since the function uses p * q^(r),
## rather than p * q^(r-1)
dgeom(x = 0:n, prob = p)
# hist(dgeom(x = 0:n, prob = p))
barplot(dgeom(x=0:n, p))
```

```
> p = 0.20
> n = 29
> # exact
> dgeom(0:n, prob = p)
 [1] 0.2000000000 0.1600000000 0.1280000000 0.1024000000 0.0819200000 0.0655360000 0.0524288000
 [8] 0.0419430400 0.0335544320 0.0268435456 0.0214748365 0.0171798692 0.0137438953 0.0109951163
[15] 0.0087960930 0.0070368744 0.0056294995 0.0045035996 0.0036028797 0.0028823038 0.0023058430
[22] 0.0018446744 0.0014757395 0.0011805916 0.0009444733 0.0007555786 0.0006044629 0.0004835703
[29] 0.0003868563 0.0003094850
>
> # hist(dgeom(x = 0:n, prob = p))
> barplot(dgeom(x=0:n, p))
```

[Figure: pasted image 20191030-023820.png]

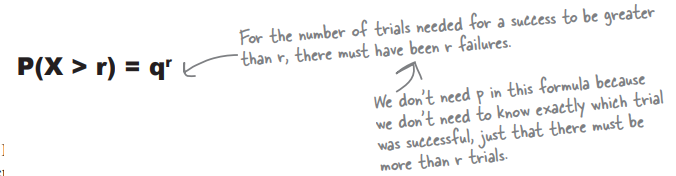

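The probability that the first success comes at some point after the first r trials (that is, more than r trials are needed):

$$ P(X > r) = q^{r} $$

For example, what is the probability that the first success comes some time after 20 trials?

Solution.

- Success on the 21st trial + on the 22nd + on the 23rd + . . . .

- This infinite sum cannot be added up term by term.

- Therefore: the total probability is 1, so subtracting the probability of succeeding within the first 20 trials from 1 gives the desired probability.

- 1 - (success on the 1st + success on the 2nd + . . . + success on the 20th)

- But this is the same as the probability of failing on all of the first 20 trials = $q^{r}$ (here r = 20).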

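```r
p <- .2
q <- 1-p
n <- 19
s <- dgeom(x = 0:n, prob = p)
# sum of the probabilities of succeeding on trials 1 through 20
sum(s)
# so this is the probability that the first success comes after trial 20
1-sum(s)
## or (as the textbook puts it) the probability of failing 20 times in a row
q^20
```

```
> p <- .2
> q <- 1-p
> n <- 19
> s <- dgeom(x = 0:n, prob = p)
> # probability of succeeding within the first 20 trials
> sum(s)
[1] 0.9884708
> # so this is the probability that the first success comes after trial 20
> 1-sum(s)
[1] 0.01152922
> ## or (as the textbook puts it) the probability of failing 20 times in a row
> q^20
[1] 0.01152922
>
```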
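The same tail probability can also be read off `pgeom()` directly. A small sketch, with illustrative variable names: because `pgeom(k, p)` is the probability of at most k failures before the first success, the upper tail at `k = r - 1` is P(X > r).

```r
p <- 0.2
q <- 1 - p
r <- 20

# Upper tail: more than r - 1 failures before the first success,
# i.e. the first success needs more than r trials
tail_builtin <- pgeom(r - 1, prob = p, lower.tail = FALSE)

# Closed form from the text: fail r times in a row
tail_formula <- q^r

print(c(tail_builtin, tail_formula))
```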

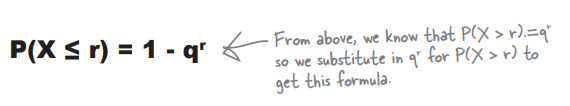

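So what is the probability that a success occurs within the first r trials? It is the complement of the probability of failing on all r trials.

$$ P(X \le r) = 1 - q^{r} $$

Alternatively, it can be computed as success on the 1st trial + success on the 2nd + . . . + success on the r-th.

```r
# suppose r = 20
p <- .2
q <- 1-p
n <- 19
s <- dgeom(x = 0:n, prob = p)
sum(s)
```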

Note that

$$P(X > r) + P(X \le r) = 1 $$

Expected value

X follows a geometric distribution with success probability p: $X \sim \text{Geo}(p)$

Reminder: the expected value of a discrete random variable

$E(X) = \sum x \cdot P(X=x)$

| textbook | x | P(X = x) | xP(X = x) | xP(X ≤ x): $E(X) = \sum (x \cdot P(X=x))$ |
|---|---|---|---|---|
| R code | `trial` | `px <- q^(trial-1)*p` | `npx <- trial*(q^(trial-1))*p` | `plex <- cumsum(trial*(q^(trial-1))*p)` |
| R code (equivalent) | | `px` | `npx <- trial*px` | `plex <- cumsum(npx)` |
| meaning | trial number | probability that the first success occurs on trial x | contribution of trial x to the expected value (as in the dice case) | expected value accumulated up to trial x |

- If Chad's slope example does not click right away, consider the workout example below.

| x | px = p(x) | npx.0 | npx = weighted probability at a given spot | plex.0 | plex | note |
|---|---|---|---|---|---|---|
| 0 | 0.1 | 0 * 0.1 | 0.00 | 0.00 | 0.00 | |
| 1 | 0.15 | 1 * 0.15 | 0.15 | 0.00 + 0.15 | 0.15 | |
| 2 | 0.4 | 2 * 0.4 | 0.80 | 0.00 + 0.15 + 0.80 | 0.95 | |
| 3 | 0.25 | 3 * 0.25 | 0.75 | 0.00 + 0.15 + 0.80 + 0.75 | 1.7 | |
| 4 | 0.1 | 4 * 0.1 | 0.40 | 0.00 + 0.15 + 0.80 + 0.75 + 0.40 | 2.1 | = this is E(x) |

- x: the number of workouts I do per week (workout frequency, 0 to 4)

- px: the probability of each frequency

- npx: weighted probability (x * px)

- plex: cumulative sum of npx (its last value is the one we are after)

- sum of npx = 2.1 = mean of all = expected value of x = E(x)
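The workout table can be reproduced in a few lines of R. This sketch reuses the column names from the table (`px`, `npx`, `plex`); the data frame name `workout` and the variable `ex` are made up for illustration.

```r
# Workout frequency per week and its probabilities (values from the table)
x  <- 0:4
px <- c(0.10, 0.15, 0.40, 0.25, 0.10)

# The probabilities of a distribution must add up to 1
stopifnot(isTRUE(all.equal(sum(px), 1)))

npx  <- x * px        # weighted probability of each frequency
plex <- cumsum(npx)   # running total of the weighted probabilities

workout <- data.frame(x, px, npx, plex)
print(workout)

# The last running total is the expected value E(x)
ex <- sum(npx)
print(ex)
```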

```r
p <- .2
q <- 1-p
trial <- c(1:8)
px <- q^(trial-1)*p
px
## npx <- trial*(q^(trial-1))*p
## the line above is equivalent to the one below
npx <- trial*px
npx
## plex <- cumsum(trial*(q^(trial-1))*p)
## the line above is equivalent to the one below
plex <- cumsum(npx)
plex
sumgeod <- data.frame(trial,px,npx,plex)
round(sumgeod,3)
```

```
> p <- .2
> q <- 1-p
> trial <- c(1,2,3,4,5,6,7,8)
> px <- q^(trial-1)*p
> px
[1] 0.20000000 0.16000000 0.12800000 0.10240000 0.08192000 0.06553600 0.05242880 0.04194304
> npx <- trial*(q^(trial-1))*p
> npx
[1] 0.2000000 0.3200000 0.3840000 0.4096000 0.4096000 0.3932160 0.3670016 0.3355443
> plex <- cumsum(trial*(q^(trial-1))*p)
> plex
[1] 0.200000 0.520000 0.904000 1.313600 1.723200 2.116416 2.483418 2.818962
> sumgeod <- data.frame(trial,px,npx,plex)
> round(sumgeod,3)
  trial    px   npx  plex
1     1 0.200 0.200 0.200
2     2 0.160 0.320 0.520
3     3 0.128 0.384 0.904
4     4 0.102 0.410 1.314
5     5 0.082 0.410 1.723
6     6 0.066 0.393 2.116
7     7 0.052 0.367 2.483
8     8 0.042 0.336 2.819
>
```

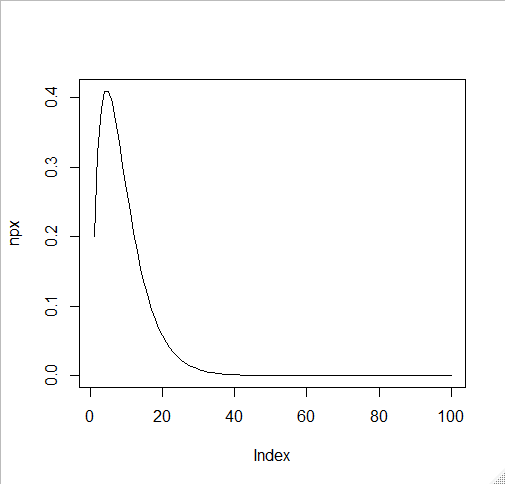

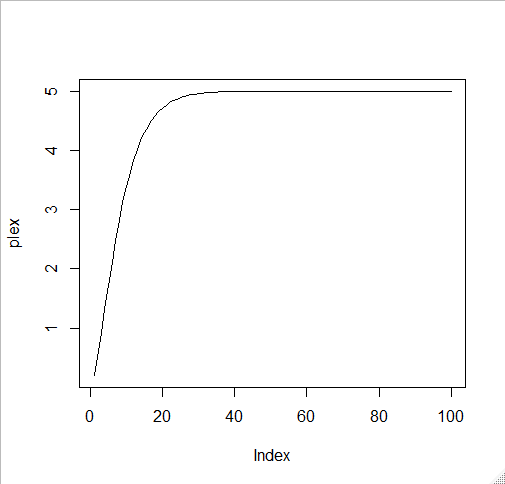

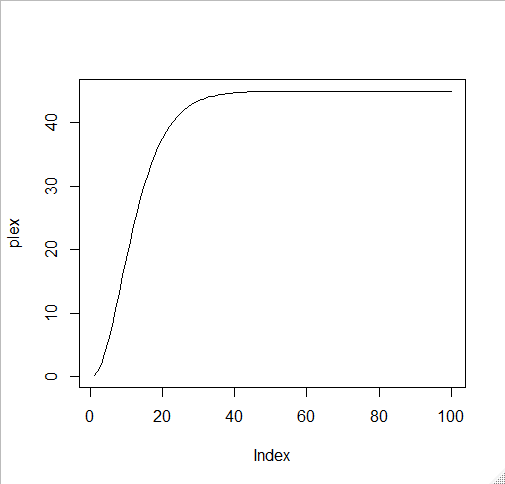

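- Unlike the workout example above, where the count is fixed at 0 to 4, the example below continues indefinitely (0 to infinity).

- Here, however, we limit it to 100 trials (1:100).

- Probability at each trial = geometric probability = q^(trial-1)*p = px

- Weighted probability at each trial = trial * px = npx

- Running total used to obtain the expected value: cumsum(npx) = plex

- In the plots below, plex is the running sum of the weighted probabilities (npx) up to each trial.

- As the plots suggest, the total accumulated as we keep moving to the right (the area under the npx curve) is the expected value.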

```r
p <- .2
q <- 1-p
trial <- c(1:100)
px <- q^(trial-1)*p
px
npx <- trial*px
npx
## plex <- cumsum(trial*(q^(trial-1))*p)
## the line above is equivalent to the one below
plex <- cumsum(npx)
plex
sumgeod <- data.frame(trial,px,npx,plex)
sumgeod
plot(npx, type="l")
plot(plex, type="l")
```

>

> p <- .2

> q <- 1-p

> trial <- c(1:100)

> px <- q^(trial-1)*p

> px

[1] 2.000000e-01 1.600000e-01 1.280000e-01 1.024000e-01

[5] 8.192000e-02 6.553600e-02 5.242880e-02 4.194304e-02

[9] 3.355443e-02 2.684355e-02 2.147484e-02 1.717987e-02

[13] 1.374390e-02 1.099512e-02 8.796093e-03 7.036874e-03

[17] 5.629500e-03 4.503600e-03 3.602880e-03 2.882304e-03

[21] 2.305843e-03 1.844674e-03 1.475740e-03 1.180592e-03

[25] 9.444733e-04 7.555786e-04 6.044629e-04 4.835703e-04

[29] 3.868563e-04 3.094850e-04 2.475880e-04 1.980704e-04

[33] 1.584563e-04 1.267651e-04 1.014120e-04 8.112964e-05

[37] 6.490371e-05 5.192297e-05 4.153837e-05 3.323070e-05

[41] 2.658456e-05 2.126765e-05 1.701412e-05 1.361129e-05

[45] 1.088904e-05 8.711229e-06 6.968983e-06 5.575186e-06

[49] 4.460149e-06 3.568119e-06 2.854495e-06 2.283596e-06

[53] 1.826877e-06 1.461502e-06 1.169201e-06 9.353610e-07

[57] 7.482888e-07 5.986311e-07 4.789049e-07 3.831239e-07

[61] 3.064991e-07 2.451993e-07 1.961594e-07 1.569275e-07

[65] 1.255420e-07 1.004336e-07 8.034690e-08 6.427752e-08

[69] 5.142202e-08 4.113761e-08 3.291009e-08 2.632807e-08

[73] 2.106246e-08 1.684997e-08 1.347997e-08 1.078398e-08

[77] 8.627183e-09 6.901746e-09 5.521397e-09 4.417118e-09

[81] 3.533694e-09 2.826955e-09 2.261564e-09 1.809251e-09

[85] 1.447401e-09 1.157921e-09 9.263367e-10 7.410694e-10

[89] 5.928555e-10 4.742844e-10 3.794275e-10 3.035420e-10

[93] 2.428336e-10 1.942669e-10 1.554135e-10 1.243308e-10

[97] 9.946465e-11 7.957172e-11 6.365737e-11 5.092590e-11

> npx <- trial*px

> npx

[1] 2.000000e-01 3.200000e-01 3.840000e-01 4.096000e-01

[5] 4.096000e-01 3.932160e-01 3.670016e-01 3.355443e-01

[9] 3.019899e-01 2.684355e-01 2.362232e-01 2.061584e-01

[13] 1.786706e-01 1.539316e-01 1.319414e-01 1.125900e-01

[17] 9.570149e-02 8.106479e-02 6.845471e-02 5.764608e-02

[21] 4.842270e-02 4.058284e-02 3.394201e-02 2.833420e-02

[25] 2.361183e-02 1.964504e-02 1.632050e-02 1.353997e-02

[29] 1.121883e-02 9.284550e-03 7.675228e-03 6.338253e-03

[33] 5.229059e-03 4.310012e-03 3.549422e-03 2.920667e-03

[37] 2.401437e-03 1.973073e-03 1.619997e-03 1.329228e-03

[41] 1.089967e-03 8.932412e-04 7.316071e-04 5.988970e-04

[45] 4.900066e-04 4.007165e-04 3.275422e-04 2.676089e-04

[49] 2.185473e-04 1.784060e-04 1.455793e-04 1.187470e-04

[53] 9.682448e-05 7.892109e-05 6.430607e-05 5.238022e-05

[57] 4.265246e-05 3.472060e-05 2.825539e-05 2.298743e-05

[61] 1.869645e-05 1.520236e-05 1.235804e-05 1.004336e-05

[65] 8.160232e-06 6.628619e-06 5.383242e-06 4.370871e-06

[69] 3.548119e-06 2.879633e-06 2.336616e-06 1.895621e-06

[73] 1.537559e-06 1.246898e-06 1.010998e-06 8.195824e-07

[77] 6.642931e-07 5.383362e-07 4.361904e-07 3.533694e-07

[81] 2.862292e-07 2.318103e-07 1.877098e-07 1.519771e-07

[85] 1.230291e-07 9.958120e-08 8.059129e-08 6.521410e-08

[89] 5.276414e-08 4.268560e-08 3.452790e-08 2.792587e-08

[93] 2.258353e-08 1.826109e-08 1.476428e-08 1.193576e-08

[97] 9.648071e-09 7.798028e-09 6.302080e-09 5.092590e-09

> ## plex <- cumsum(trial*(q^(trial-1))*p)

> ## the line above is equivalent to the one below

> plex <- cumsum(npx)

> plex

[1] 0.200000 0.520000 0.904000 1.313600 1.723200 2.116416 2.483418

[8] 2.818962 3.120952 3.389387 3.625610 3.831769 4.010440 4.164371

[15] 4.296313 4.408903 4.504604 4.585669 4.654124 4.711770 4.760192

[22] 4.800775 4.834717 4.863051 4.886663 4.906308 4.922629 4.936169

[29] 4.947388 4.956672 4.964347 4.970686 4.975915 4.980225 4.983774

[36] 4.986695 4.989096 4.991069 4.992689 4.994018 4.995108 4.996002

[43] 4.996733 4.997332 4.997822 4.998223 4.998550 4.998818 4.999037

[50] 4.999215 4.999361 4.999479 4.999576 4.999655 4.999719 4.999772

[57] 4.999814 4.999849 4.999877 4.999900 4.999919 4.999934 4.999947

[64] 4.999957 4.999965 4.999971 4.999977 4.999981 4.999985 4.999988

[71] 4.999990 4.999992 4.999993 4.999995 4.999996 4.999997 4.999997

[78] 4.999998 4.999998 4.999998 4.999999 4.999999 4.999999 4.999999

[85] 4.999999 5.000000 5.000000 5.000000 5.000000 5.000000 5.000000

[92] 5.000000 5.000000 5.000000 5.000000 5.000000 5.000000 5.000000

[99] 5.000000 5.000000

> sumgeod <- data.frame(trial,px,npx,plex)

> sumgeod

trial px npx plex

1 1 2.000000e-01 2.000000e-01 0.200000

2 2 1.600000e-01 3.200000e-01 0.520000

3 3 1.280000e-01 3.840000e-01 0.904000

4 4 1.024000e-01 4.096000e-01 1.313600

5 5 8.192000e-02 4.096000e-01 1.723200

6 6 6.553600e-02 3.932160e-01 2.116416

7 7 5.242880e-02 3.670016e-01 2.483418

8 8 4.194304e-02 3.355443e-01 2.818962

9 9 3.355443e-02 3.019899e-01 3.120952

10 10 2.684355e-02 2.684355e-01 3.389387

11 11 2.147484e-02 2.362232e-01 3.625610

12 12 1.717987e-02 2.061584e-01 3.831769

13 13 1.374390e-02 1.786706e-01 4.010440

14 14 1.099512e-02 1.539316e-01 4.164371

15 15 8.796093e-03 1.319414e-01 4.296313

16 16 7.036874e-03 1.125900e-01 4.408903

17 17 5.629500e-03 9.570149e-02 4.504604

18 18 4.503600e-03 8.106479e-02 4.585669

19 19 3.602880e-03 6.845471e-02 4.654124

20 20 2.882304e-03 5.764608e-02 4.711770

21 21 2.305843e-03 4.842270e-02 4.760192

22 22 1.844674e-03 4.058284e-02 4.800775

23 23 1.475740e-03 3.394201e-02 4.834717

24 24 1.180592e-03 2.833420e-02 4.863051

25 25 9.444733e-04 2.361183e-02 4.886663

26 26 7.555786e-04 1.964504e-02 4.906308

27 27 6.044629e-04 1.632050e-02 4.922629

28 28 4.835703e-04 1.353997e-02 4.936169

29 29 3.868563e-04 1.121883e-02 4.947388

30 30 3.094850e-04 9.284550e-03 4.956672

31 31 2.475880e-04 7.675228e-03 4.964347

32 32 1.980704e-04 6.338253e-03 4.970686

33 33 1.584563e-04 5.229059e-03 4.975915

34 34 1.267651e-04 4.310012e-03 4.980225

35 35 1.014120e-04 3.549422e-03 4.983774

36 36 8.112964e-05 2.920667e-03 4.986695

37 37 6.490371e-05 2.401437e-03 4.989096

38 38 5.192297e-05 1.973073e-03 4.991069

39 39 4.153837e-05 1.619997e-03 4.992689

40 40 3.323070e-05 1.329228e-03 4.994018

41 41 2.658456e-05 1.089967e-03 4.995108

42 42 2.126765e-05 8.932412e-04 4.996002

43 43 1.701412e-05 7.316071e-04 4.996733

44 44 1.361129e-05 5.988970e-04 4.997332

45 45 1.088904e-05 4.900066e-04 4.997822

46 46 8.711229e-06 4.007165e-04 4.998223

47 47 6.968983e-06 3.275422e-04 4.998550

48 48 5.575186e-06 2.676089e-04 4.998818

49 49 4.460149e-06 2.185473e-04 4.999037

50 50 3.568119e-06 1.784060e-04 4.999215

51 51 2.854495e-06 1.455793e-04 4.999361

52 52 2.283596e-06 1.187470e-04 4.999479

53 53 1.826877e-06 9.682448e-05 4.999576

54 54 1.461502e-06 7.892109e-05 4.999655

55 55 1.169201e-06 6.430607e-05 4.999719

56 56 9.353610e-07 5.238022e-05 4.999772

57 57 7.482888e-07 4.265246e-05 4.999814

58 58 5.986311e-07 3.472060e-05 4.999849

59 59 4.789049e-07 2.825539e-05 4.999877

60 60 3.831239e-07 2.298743e-05 4.999900

61 61 3.064991e-07 1.869645e-05 4.999919

62 62 2.451993e-07 1.520236e-05 4.999934

63 63 1.961594e-07 1.235804e-05 4.999947

64 64 1.569275e-07 1.004336e-05 4.999957

65 65 1.255420e-07 8.160232e-06 4.999965

66 66 1.004336e-07 6.628619e-06 4.999971

67 67 8.034690e-08 5.383242e-06 4.999977

68 68 6.427752e-08 4.370871e-06 4.999981

69 69 5.142202e-08 3.548119e-06 4.999985

70 70 4.113761e-08 2.879633e-06 4.999988

71 71 3.291009e-08 2.336616e-06 4.999990

72 72 2.632807e-08 1.895621e-06 4.999992

73 73 2.106246e-08 1.537559e-06 4.999993

74 74 1.684997e-08 1.246898e-06 4.999995

75 75 1.347997e-08 1.010998e-06 4.999996

76 76 1.078398e-08 8.195824e-07 4.999997

77 77 8.627183e-09 6.642931e-07 4.999997

78 78 6.901746e-09 5.383362e-07 4.999998

79 79 5.521397e-09 4.361904e-07 4.999998

80 80 4.417118e-09 3.533694e-07 4.999998

81 81 3.533694e-09 2.862292e-07 4.999999

82 82 2.826955e-09 2.318103e-07 4.999999

83 83 2.261564e-09 1.877098e-07 4.999999

84 84 1.809251e-09 1.519771e-07 4.999999

85 85 1.447401e-09 1.230291e-07 4.999999

86 86 1.157921e-09 9.958120e-08 5.000000 ###########

87 87 9.263367e-10 8.059129e-08 5.000000

88 88 7.410694e-10 6.521410e-08 5.000000

89 89 5.928555e-10 5.276414e-08 5.000000

90 90 4.742844e-10 4.268560e-08 5.000000

91 91 3.794275e-10 3.452790e-08 5.000000

92 92 3.035420e-10 2.792587e-08 5.000000

93 93 2.428336e-10 2.258353e-08 5.000000

94 94 1.942669e-10 1.826109e-08 5.000000

95 95 1.554135e-10 1.476428e-08 5.000000

96 96 1.243308e-10 1.193576e-08 5.000000

97 97 9.946465e-11 9.648071e-09 5.000000

98 98 7.957172e-11 7.798028e-09 5.000000

99 99 6.365737e-11 6.302080e-09 5.000000

100 100 5.092590e-11 5.092590e-09 5.000000

> plot(npx, type="l")

> plot(plex, type="l")

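- From the 86th trial on, the running total no longer increases at the printed precision.

- The computed values converge to 5.

- Unlike the workout example, there is no fixed set of five outcomes, so we went up to 100 trials to see how the mean behaves.

- After the 86th trial the printed mean stays at 5.

- Therefore the expected value of the geometric distribution above is 5.

- This expected value can also be obtained directly, as follows.

- When $X \sim \text{Geo}(p)$, the expected value is $E(X) = \dfrac{1}{p}$.

- The proof is given below.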

Proof
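One common way to show this is sketched here. It is a standard argument, not necessarily the proof this page originally linked to. It differentiates the geometric series term by term, which is valid for $0 \le q < 1$:

\begin{eqnarray*}
E(X) & = & \sum_{r=1}^{\infty} r \cdot p \cdot q^{r-1} \\
& = & p \sum_{r=1}^{\infty} \frac{d}{dq} q^{r} \\
& = & p \cdot \frac{d}{dq} \left( \sum_{r=0}^{\infty} q^{r} \right) \\
& = & p \cdot \frac{d}{dq} \left( \frac{1}{1-q} \right) \\
& = & \frac{p}{(1-q)^{2}} \\
& = & \frac{p}{p^{2}} = \frac{1}{p}
\end{eqnarray*}

With $p = 0.2$ this gives $1/0.2 = 5$, which agrees with the value the plex column converges to.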

Variance proof

Proof of the variance of the geometric distribution

Below is a simulation in R.

\begin{eqnarray*}

Var(X) & = & E((X-E(X))^{2}) \\

& = & E(X^{2} - 2XE(X) + E(X)^{2}) \\

& = & E(X^{2}) - E(2XE(X)) + E(X)^{2} \\

& = & E(X^{2}) - 2E(X)E(X) + E(X)^{2} \\

& = & E(X^{2}) - 2E(X)^{2} + E(X)^{2} \\

& = & E(X^{2}) - E(X)^{2}

\end{eqnarray*}

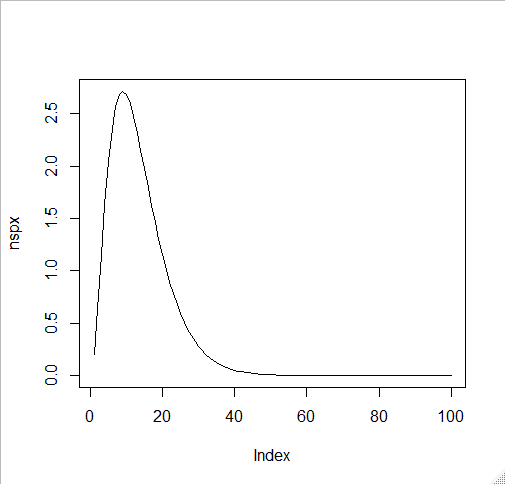

The first part of the Var(X) calculation, $E[X^{2}]$, is

$E(X^{2}) = \sum {x^{2} \cdot P(X=x)}$

by the same rule as $E(X) = \sum {x \cdot P(X=x)}$.

After computing it, subtracting $E[X]^{2}$ gives Var(X).

For reference, $E[X]^{2} = 25$:

\begin{eqnarray*} E(X)^{2} & = & \left(\frac{1}{p}\right)^{2} \\ & = & (5^{2}) \\ & = & 25 \end{eqnarray*}

```r
p <- .2
q <- 1-p
# 100 trials
trial <- c(1:100)
# px = probability of geometric distribution
px <- q^(trial-1)*p
px
# for variance, Var(X) = E(X^2)-E(X)^2
# E(X^2) = sum(x^2*p(X=x))
# here x corresponds to the trial number
# after summing up the above
# subtract E(X)^2, that is, squared value
# of expected value of X

# for the X^2 part in E(X^2)
# that is, x^2*p(x) part
nspx <- (trial^2)*px
nspx
# summing up the nspx
# sum of x^2*p(x)
plex <- cumsum(nspx)
plex
sumgeod <- data.frame(trial,px,nspx,plex)
round(sumgeod,3)
plot(nspx, type="l")
plot(plex, type="l")
```

> p <- .2

> q <- 1-p

> trial <- c(1:100)

> px <- q^(trial-1)*p

> px

[1] 2.000000e-01 1.600000e-01 1.280000e-01 1.024000e-01 8.192000e-02 6.553600e-02 5.242880e-02

[8] 4.194304e-02 3.355443e-02 2.684355e-02 2.147484e-02 1.717987e-02 1.374390e-02 1.099512e-02

[15] 8.796093e-03 7.036874e-03 5.629500e-03 4.503600e-03 3.602880e-03 2.882304e-03 2.305843e-03

[22] 1.844674e-03 1.475740e-03 1.180592e-03 9.444733e-04 7.555786e-04 6.044629e-04 4.835703e-04

[29] 3.868563e-04 3.094850e-04 2.475880e-04 1.980704e-04 1.584563e-04 1.267651e-04 1.014120e-04

[36] 8.112964e-05 6.490371e-05 5.192297e-05 4.153837e-05 3.323070e-05 2.658456e-05 2.126765e-05

[43] 1.701412e-05 1.361129e-05 1.088904e-05 8.711229e-06 6.968983e-06 5.575186e-06 4.460149e-06

[50] 3.568119e-06 2.854495e-06 2.283596e-06 1.826877e-06 1.461502e-06 1.169201e-06 9.353610e-07

[57] 7.482888e-07 5.986311e-07 4.789049e-07 3.831239e-07 3.064991e-07 2.451993e-07 1.961594e-07

[64] 1.569275e-07 1.255420e-07 1.004336e-07 8.034690e-08 6.427752e-08 5.142202e-08 4.113761e-08

[71] 3.291009e-08 2.632807e-08 2.106246e-08 1.684997e-08 1.347997e-08 1.078398e-08 8.627183e-09

[78] 6.901746e-09 5.521397e-09 4.417118e-09 3.533694e-09 2.826955e-09 2.261564e-09 1.809251e-09

[85] 1.447401e-09 1.157921e-09 9.263367e-10 7.410694e-10 5.928555e-10 4.742844e-10 3.794275e-10

[92] 3.035420e-10 2.428336e-10 1.942669e-10 1.554135e-10 1.243308e-10 9.946465e-11 7.957172e-11

[99] 6.365737e-11 5.092590e-11

> ## for variance, Var(X) = E(X^2)-E(X)^2

> ## E(X^2) = sum(x^2*p(X=x))

> ## after summing up the above

> ## subtract E(X)^2, that is, squared value

> ## of expected value of X

> nspx <- (trial^2)*(q^(trial-1))*p

> nspx

[1] 2.000000e-01 6.400000e-01 1.152000e+00 1.638400e+00 2.048000e+00 2.359296e+00 2.569011e+00

[8] 2.684355e+00 2.717909e+00 2.684355e+00 2.598455e+00 2.473901e+00 2.322718e+00 2.155043e+00

[15] 1.979121e+00 1.801440e+00 1.626925e+00 1.459166e+00 1.300640e+00 1.152922e+00 1.016877e+00

[22] 8.928224e-01 7.806662e-01 6.800208e-01 5.902958e-01 5.107712e-01 4.406535e-01 3.791191e-01

[29] 3.253461e-01 2.785365e-01 2.379321e-01 2.028241e-01 1.725589e-01 1.465404e-01 1.242298e-01

[36] 1.051440e-01 8.885318e-02 7.497677e-02 6.317987e-02 5.316912e-02 4.468865e-02 3.751613e-02

[43] 3.145910e-02 2.635147e-02 2.205030e-02 1.843296e-02 1.539448e-02 1.284523e-02 1.070882e-02

[50] 8.920298e-03 7.424542e-03 6.174844e-03 5.131698e-03 4.261739e-03 3.536834e-03 2.933292e-03

[57] 2.431190e-03 2.013795e-03 1.667068e-03 1.379246e-03 1.140483e-03 9.425461e-04 7.785568e-04

[64] 6.427752e-04 5.304151e-04 4.374889e-04 3.606772e-04 2.972193e-04 2.448202e-04 2.015743e-04

[71] 1.658998e-04 1.364847e-04 1.122418e-04 9.227042e-05 7.582485e-05 6.228826e-05 5.115057e-05

[78] 4.199022e-05 3.445904e-05 2.826955e-05 2.318457e-05 1.900845e-05 1.557992e-05 1.276608e-05

[85] 1.045747e-05 8.563983e-06 7.011443e-06 5.738841e-06 4.696008e-06 3.841704e-06 3.142039e-06

[92] 2.569180e-06 2.100268e-06 1.716542e-06 1.402607e-06 1.145833e-06 9.358629e-07 7.642068e-07

[99] 6.239059e-07 5.092590e-07

> ## summing up the nspx

> plex <- cumsum(nspx)

> plex

[1] 0.200000 0.840000 1.992000 3.630400 5.678400 8.037696 10.606707 13.291062 16.008971

[10] 18.693325 21.291781 23.765682 26.088400 28.243443 30.222564 32.024004 33.650929 35.110095

[19] 36.410735 37.563656 38.580533 39.473355 40.254022 40.934042 41.524338 42.035109 42.475763

[28] 42.854882 43.180228 43.458765 43.696697 43.899521 44.072080 44.218620 44.342850 44.447994

[37] 44.536847 44.611824 44.675004 44.728173 44.772862 44.810378 44.841837 44.868188 44.890239

[46] 44.908671 44.924066 44.936911 44.947620 44.956540 44.963965 44.970140 44.975271 44.979533

[55] 44.983070 44.986003 44.988434 44.990448 44.992115 44.993495 44.994635 44.995578 44.996356

[64] 44.996999 44.997529 44.997967 44.998327 44.998625 44.998870 44.999071 44.999237 44.999373

[73] 44.999486 44.999578 44.999654 44.999716 44.999767 44.999809 44.999844 44.999872 44.999895

[82] 44.999914 44.999930 44.999943 44.999953 44.999962 44.999969 44.999974 44.999979 44.999983

[91] 44.999986 44.999989 44.999991 44.999992 44.999994 44.999995 44.999996 44.999997 44.999997

[100] 44.999998

> sumgeod <- data.frame(trial,px,nspx,plex)

> round(sumgeod,3)

trial px nspx plex

1 1 0.200 0.200 0.200

2 2 0.160 0.640 0.840

3 3 0.128 1.152 1.992

4 4 0.102 1.638 3.630

5 5 0.082 2.048 5.678

6 6 0.066 2.359 8.038

7 7 0.052 2.569 10.607

8 8 0.042 2.684 13.291

9 9 0.034 2.718 16.009

10 10 0.027 2.684 18.693

11 11 0.021 2.598 21.292

12 12 0.017 2.474 23.766

13 13 0.014 2.323 26.088

14 14 0.011 2.155 28.243

15 15 0.009 1.979 30.223

16 16 0.007 1.801 32.024

17 17 0.006 1.627 33.651

18 18 0.005 1.459 35.110

19 19 0.004 1.301 36.411

20 20 0.003 1.153 37.564

21 21 0.002 1.017 38.581

22 22 0.002 0.893 39.473

23 23 0.001 0.781 40.254

24 24 0.001 0.680 40.934

25 25 0.001 0.590 41.524

26 26 0.001 0.511 42.035

27 27 0.001 0.441 42.476

28 28 0.000 0.379 42.855

29 29 0.000 0.325 43.180

30 30 0.000 0.279 43.459

31 31 0.000 0.238 43.697

32 32 0.000 0.203 43.900

33 33 0.000 0.173 44.072

34 34 0.000 0.147 44.219

35 35 0.000 0.124 44.343

36 36 0.000 0.105 44.448

37 37 0.000 0.089 44.537

38 38 0.000 0.075 44.612

39 39 0.000 0.063 44.675

40 40 0.000 0.053 44.728

41 41 0.000 0.045 44.773

42 42 0.000 0.038 44.810

43 43 0.000 0.031 44.842

44 44 0.000 0.026 44.868

45 45 0.000 0.022 44.890

46 46 0.000 0.018 44.909

47 47 0.000 0.015 44.924

48 48 0.000 0.013 44.937

49 49 0.000 0.011 44.948

50 50 0.000 0.009 44.957

51 51 0.000 0.007 44.964

52 52 0.000 0.006 44.970

53 53 0.000 0.005 44.975

54 54 0.000 0.004 44.980

55 55 0.000 0.004 44.983

56 56 0.000 0.003 44.986

57 57 0.000 0.002 44.988

58 58 0.000 0.002 44.990

59 59 0.000 0.002 44.992

60 60 0.000 0.001 44.993

61 61 0.000 0.001 44.995

62 62 0.000 0.001 44.996

63 63 0.000 0.001 44.996

64 64 0.000 0.001 44.997

65 65 0.000 0.001 44.998

66 66 0.000 0.000 44.998

67 67 0.000 0.000 44.998

68 68 0.000 0.000 44.999

69 69 0.000 0.000 44.999

70 70 0.000 0.000 44.999

71 71 0.000 0.000 44.999

72 72 0.000 0.000 44.999

73 73 0.000 0.000 44.999

74 74 0.000 0.000 45.000

75 75 0.000 0.000 45.000

76 76 0.000 0.000 45.000

77 77 0.000 0.000 45.000

78 78 0.000 0.000 45.000

79 79 0.000 0.000 45.000

80 80 0.000 0.000 45.000

81 81 0.000 0.000 45.000

82 82 0.000 0.000 45.000

83 83 0.000 0.000 45.000

84 84 0.000 0.000 45.000

85 85 0.000 0.000 45.000

86 86 0.000 0.000 45.000

87 87 0.000 0.000 45.000

88 88 0.000 0.000 45.000

89 89 0.000 0.000 45.000

90 90 0.000 0.000 45.000

91 91 0.000 0.000 45.000

92 92 0.000 0.000 45.000

93 93 0.000 0.000 45.000

94 94 0.000 0.000 45.000

95 95 0.000 0.000 45.000

96 96 0.000 0.000 45.000

97 97 0.000 0.000 45.000

98 98 0.000 0.000 45.000

99 99 0.000 0.000 45.000

100 100 0.000 0.000 45.000

> plot(nspx, type="l")

> plot(plex, type="l")

>

As shown above, the plex column corresponds to $E(X^2)$, which is 45 (more precisely, it converges to 45). As noted earlier, $Var(X) = E(X^2) - E(X)^2$ and $E(X)^2 = 25$, so

\begin{align*}

Var(X) & = E(X^2) - E(X)^2 \\

& = 45 - 25 \\

& = 20

\end{align*}

In general,

\begin{eqnarray*}

Var(X) = \displaystyle \frac{q}{p^{2}}

\end{eqnarray*}

Computing this in R:

```
> q/(p^2)
[1] 20
>
```
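The calculations above add up exact probabilities. As a complementary check, one can also draw random geometric values and compare the sample mean and variance with $1/p$ and $q/p^{2}$. This is a hedged sketch: the seed, the sample size `n_sim`, and the variable names are arbitrary choices, and the results only approximate 5 and 20.

```r
set.seed(1)        # arbitrary seed, only to make the run repeatable
p <- 0.2
q <- 1 - p
n_sim <- 100000    # number of simulated snowboarders (arbitrary)

# rgeom() returns the number of failures before the first success,
# so add 1 to get the number of trials needed
x <- rgeom(n_sim, prob = p) + 1

sim_mean <- mean(x)
sim_var  <- var(x)

# Compare simulation with the closed forms 1/p and q/p^2
print(c(sim_mean, 1 / p))
print(c(sim_var, q / p^2))
```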

Sum up

\begin{align} P(X = r) & = p * q^{r-1} \\ P(X > r) & = q^{r} \\ P(X \le r) & = 1 - q^{r} \\ E(X) & = \displaystyle \frac{1}{p} \\ Var(X) & = \displaystyle \frac{q}{p^{2}} \\ \end{align}

Proof of mean and variance of geometric distribution

For proofs of $(4)$ and $(5)$, see Mean and Variance of Geometric Distribution.

e.g.,

The probability that another snowboarder will make it down the slope without falling over is 0.4. Your job is to play like you’re the snowboarder and work out the following probabilities for your slope success.

- The probability that you will be successful on your second attempt, while failing on your first.

- The probability that you will be successful in 4 attempts or fewer.

- The probability that you will need more than 4 attempts to be successful.

- The number of attempts you expect you’ll need to make before being successful.

- The variance of the number of attempts.

$P(X = 2) = p * q^{2-1}$

$P(X \le 4) = 1 - q^{4}$

$P(X > 4) = q^{4}$

$E(X) = \displaystyle \frac{1}{p}$

$Var(X) = \displaystyle \frac{q}{p^{2}}$

```
> p <- .4
> q <- 1-p
>
> p*q^(2-1)
[1] 0.24
> dgeom(1, p)
[1] 0.24
>
> 1-q^4
[1] 0.8704
> dgeom(0:3, p)
[1] 0.4000 0.2400 0.1440 0.0864
> sum(dgeom(0:3, p))
[1] 0.8704
> pgeom(3, p)
[1] 0.8704
>
> q^4
[1] 0.1296
> 1-sum(dgeom(0:3, p))
[1] 0.1296
> 1-pgeom(3, p)
[1] 0.1296
> pgeom(3, p, lower.tail = F)
[1] 0.1296
>
> 1/p
[1] 2.5
>
> q/p^2
[1] 3.75
>
```

Binomial Distributions

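- If a particular event A occurs with probability p in a single trial,

- then the probability distribution of the number of times A occurs in n (independent) trials

- is called the binomial distribution.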

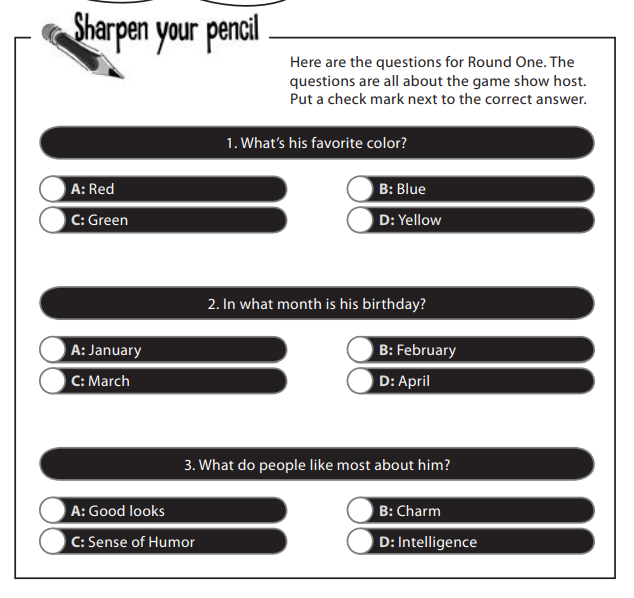

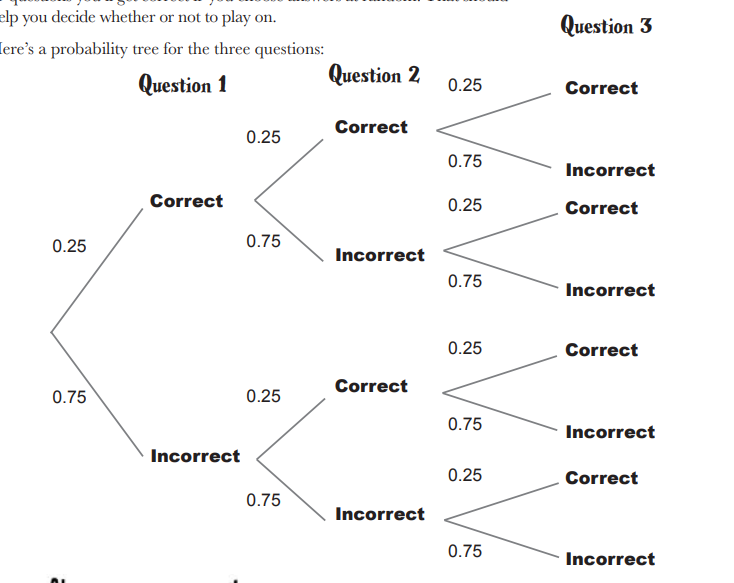

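In the example below:

- The probability of answering a single question correctly is 1/4, and of answering it incorrectly 3/4.

- The table gives the probability distribution of the number of correct answers over 3 questions (3 trials).

| x | P(X=x) | power of .75 | power of .25 |
|---|---|---|---|
| 0 | 0.75 * 0.75 * 0.75 | 3 | 0 |
| 1 | 3 * (0.75 * 0.75 * 0.25) | 2 | 1 |
| 2 | 3 * (0.75 * 0.25 * 0.25) | 1 | 2 |
| 3 | 0.25 * 0.25 * 0.25 | 0 | 3 |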

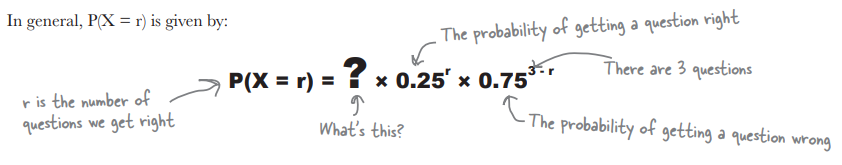

$$P(X = r) = {\huge\text{?} \cdot 0.25^{r} \cdot 0.75^{3-r}} $$

$$P(X = r) = {\huge_{3}C_{r}} \cdot 0.25^{r} \cdot 0.75^{3-r}$$

$_{n}C_{r}$ is the number of ways to choose r items out of n (without regard to order), so there are $_{3}C_{1} = 3$ ways to get exactly one of the three questions right.

Probability for getting one question right

\begin{eqnarray*}
P(X = 1) & = & _{3}C_{1} \cdot 0.25^{1} \cdot 0.75^{3-1} \\
& = & \frac{3!}{1! \cdot (3-1)!} \cdot 0.25 \cdot 0.75^2 \\
& = & 3 \cdot 0.25 \cdot 0.5625 \\
& = & 0.421875
\end{eqnarray*}
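The count of 3 ways and the resulting probability can be checked in R. A minimal sketch with illustrative variable names: `combn()` lists which question is the correct one, `choose()` gives the count, and `dbinom()` returns the same probability in one call.

```r
n <- 3        # number of questions
p <- 0.25     # probability of answering one question correctly
q <- 1 - p

# Each column is one way of picking the single correct question
ways <- combn(n, 1)
n_ways <- ncol(ways)

# Formula from the text: nCr * p^r * q^(n-r) with r = 1
by_hand <- choose(n, 1) * p^1 * q^(n - 1)

# Built-in binomial probability
builtin <- dbinom(1, size = n, prob = p)

print(n_ways)
print(c(by_hand, builtin))
```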

$$P(X = r) = _{n}C_{r} \cdot 0.25^{r} \cdot 0.75^{n-r}$$

$$P(X = r) = _{n}C_{r} \cdot p^{r} \cdot q^{n-r}$$

- You’re running a series of independent trials. (You carry out n trials.)

- There can be either a success or failure for each trial, and the probability of success is the same for each trial. (Each trial is either a success or a failure, and the probability of success, and hence of failure, is the same in every trial.)

- There are a finite number of trials. Note that this is different from that of geometric distribution. (The number of trials is fixed at n; it is not unbounded.)

If X denotes the number of successful outcomes in n trials, use the formula below to find the probability of exactly r successes.

\begin{eqnarray*} P(X = r) & = & _{n}C_{r} \cdot p^{r} \cdot q^{n-r} \;\;\; \text{Where,} \\ \displaystyle _{n}C_{r} & = & \displaystyle \dfrac {n!}{r!(n-r)!} \\ \text{c.f., } \\ \displaystyle _{n} P_{r} & = & \displaystyle \dfrac {n!} {(n-r)!} \\ \end{eqnarray*}

p = probability of success in each trial

n = number of trials

r = number of successes (correct answers) whose probability we want

$$X \sim B(n,p)$$

Expectation and Variance of

Toss a fair coin once. What is the distribution of the number of heads?

- A single trial

- The trial can be one of two possible outcomes – success and failure

- P(success) = p

- P(failure) = 1-p

X = 0, 1 (failure and success)

$P(X=x) = p^{x}(1-p)^{1-x}$ or

$P(x) = p^{x}(1-p)^{1-x}$

Note.

| x | 0 | 1 |
|---|---|---|
| p(x) | q = (1-p) | p |

When x = 0 (failure), $P(X = 0) = p^{0}(1-p)^{1-0} = (1-p)$ = Probability of failure

When x = 1 (success), $P(X = 1) = p^{1}(1-p)^{0} = p $ = Probability of success

This is called Bernoulli distribution.

- Bernoulli distribution expands to binomial distribution, geometric distribution, etc.

- Binomial distribution = The distribution of the number of successes in n independent Bernoulli trials.

- Geometric distribution = The distribution of the number of trials needed to get the first success in independent Bernoulli trials.

$$X \sim B(1,p)$$

\begin{eqnarray*} E(X) & = & \sum{x * p(x)} \\ & = & (0*q) + (1*p) \\ & = & p \end{eqnarray*}

\begin{eqnarray*} Var(X) & = & E((X - E(X))^{2}) \\ & = & \sum_{x}(x-E(X))^2p(x) \ldots \ldots \ldots E(X) = p \\ & = & (0 - p)^{2}*q + (1 - p)^{2}*p \\ & = & (0^2 - 2p0 + p^2)*q + (1-2p+p^2)*p \\ & = & p^2*(1-p) + (1-2p+p^2)*p \\ & = & p^2 - p^3 + p - 2p^2 + p^3 \\ & = & p - p^2 \\ & = & p(1-p) \\ & = & pq \end{eqnarray*}

For generalization,

$$X \sim B(n,p)$$

\begin{eqnarray*} E(X) & = & E(X_{1}) + E(X_{2}) + ... + E(X_{n}) \\ & = & n * E(X_{i}) \\ & = & n * p \end{eqnarray*}

\begin{eqnarray*} Var(X) & = & Var(X_{1}) + Var(X_{2}) + ... + Var(X_{n}) \\ & = & n * Var(X_{i}) \\ & = & n * p * q \end{eqnarray*}
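The results $E(X) = np$ and $Var(X) = npq$ can be checked numerically by summing over all possible outcomes. A small sketch, assuming n = 5 and p = 0.25 purely for illustration (variable names are made up):

```r
n <- 5
p <- 0.25
q <- 1 - p

x  <- 0:n                          # all possible numbers of successes
px <- dbinom(x, size = n, prob = p)

# E(X) = sum of x * P(X = x)
ex <- sum(x * px)
# Var(X) = E(X^2) - E(X)^2
vx <- sum(x^2 * px) - ex^2

print(c(ex, n * p))        # expected value: by summation and by formula
print(c(vx, n * p * q))    # variance: by summation and by formula
```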

e.g.,

In the latest round of Who Wants To Win A Swivel Chair, there are 5 questions. The probability of getting a successful outcome in a single trial is 0.25.

- What’s the probability of getting exactly two questions right?

- What’s the probability of getting exactly three questions right?

- What’s the probability of getting two or three questions right?

- What’s the probability of getting no questions right?

- What are the expectation and variance?

Ans 1.

```r
p <- .25
q <- 1-p
r <- 2
n <- 5
# combinations of 5,2
c <- choose(n,r)
ans1 <- c*(p^r)*(q^(n-r))
ans1
# or
choose(n, r)*(p^r)*(q^(n-r))
dbinom(r, n, p)
# dbinom(2, 5, 1/4)
```

```
> p <- .25
> q <- 1-p
> r <- 2
> n <-5
> # combinations of 5,2
> c <- choose(n,r)
> ans1 <- c*(p^r)*(q^(n-r))
> ans1
[1] 0.2636719
>
> choose(n, r)*(p^r)*(q^(n-r))
[1] 0.2636719
>
> dbinom(r, n, p)
[1] 0.2636719
>
```

Ans 2.

```r
p <- .25
q <- 1-p
r <- 3
n <- 5
# combinations of 5,3
c <- choose(n,r)
ans2 <- c*(p^r)*(q^(n-r))
ans2
choose(n, r)*(p^r)*(q^(n-r))
dbinom(r, n, p)
```

```
> p <- .25
> q <- 1-p
> r <- 3
> n <-5
> # combinations of 5,3
> c <- choose(n,r)
> ans2 <- c*(p^r)*(q^(n-r))
> ans2
[1] 0.08789062
>
> choose(n,r)*(p^r)*(q^(n-r))
[1] 0.08789062
>
> dbinom(r, n, p)
[1] 0.08789063
>
```

Ans 3. (Important)

```r
ans1 + ans2
dbinom(2, 5, .25) + dbinom(3, 5, .25)
dbinom(2:3, 5, .25)
sum(dbinom(2:3, 5, .25))
pbinom(3, 5, .25) - pbinom(1, 5, .25)
```

```
> ans1 + ans2
[1] 0.3515625
> dbinom(2, 5, .25) + dbinom(3, 5, .25)
[1] 0.3515625
> dbinom(2:3, 5, .25)
[1] 0.26367187 0.08789063
> sum(dbinom(2:3, 5, .25))
[1] 0.3515625
> pbinom(3, 5, .25) - pbinom(1, 5, .25)
[1] 0.3515625
>
```

Ans 4.

```r
p <- .25
q <- 1-p
r <- 0
n <- 5
# combinations of 5,0
c <- choose(n,r)
ans4 <- c*(p^r)*(q^(n-r))
ans4
```

```
> p <- .25
> q <- 1-p
> r <- 0
> n <-5
> # combinations of 5,0
> c <- choose(n,r)
> ans4 <- c*(p^r)*(q^(n-r))
> ans4
[1] 0.2373047
>
```

Ans 5

```r
p <- .25
q <- 1-p
n <- 5
exp.x <- n*p
exp.x
```

```
> p <- .25
> q <- 1-p
> n <- 5
> exp.x <- n*p
> exp.x
[1] 1.25
```

```r
p <- .25
q <- 1-p
n <- 5
var.x <- n*p*q
var.x
```

```
> p <- .25
> q <- 1-p
> n <- 5
> var.x <- n*p*q
> var.x
[1] 0.9375
>
```

Q. The probability of answering one question correctly is 1/4. If there are six questions in total, what is the probability of getting 0 to 5 questions right? Use dbinom to find it.

```r
p <- 1/4
q <- 1-p
n <- 6
pbinom(5, n, p)
1 - dbinom(6, n, p)
sum(dbinom(0:5, n, p))
```

```
> p <- 1/4
> q <- 1-p
> n <- 6
> pbinom(5, n, p)
[1] 0.9997559
> 1 - dbinom(6, n, p)
[1] 0.9997559
> sum(dbinom(0:5, n, p))
[1] 0.9997559
>
```

Important . . . .

```r
# http://commres.net/wiki/mean_and_variance_of_binomial_distribution
# ##################################################################
#
p <- 1/4
q <- 1 - p
n <- 5
r <- 0
all.dens <- dbinom(0:n, n, p)
all.dens
sum(all.dens)

choose(5,0)*p^0*(q^(5-0))
choose(5,1)*p^1*(q^(5-1))
choose(5,2)*p^2*(q^(5-2))
choose(5,3)*p^3*(q^(5-3))
choose(5,4)*p^4*(q^(5-4))
choose(5,5)*p^5*(q^(5-5))
all.dens

choose(5,0)*p^0*(q^(5-0)) +
  choose(5,1)*p^1*(q^(5-1)) +
  choose(5,2)*p^2*(q^(5-2)) +
  choose(5,3)*p^3*(q^(5-3)) +
  choose(5,4)*p^4*(q^(5-4)) +
  choose(5,5)*p^5*(q^(5-5))
sum(all.dens)
#
(p+q)^n
# note that n = whatever, (p+q)^n = 1
```

```
> # http://commres.net/wiki/mean_and_variance_of_binomial_distribution
> # ##################################################################
> #
> p <- 1/4
> q <- 1 - p
> n <- 5
> r <- 0
> all.dens <- dbinom(0:n, n, p)
> all.dens
[1] 0.2373046875 0.3955078125 0.2636718750 0.0878906250
[5] 0.0146484375 0.0009765625
> sum(all.dens)
[1] 1
>
> choose(5,0)*p^0*(q^(5-0))
[1] 0.2373047
> choose(5,1)*p^1*(q^(5-1))
[1] 0.3955078
> choose(5,2)*p^2*(q^(5-2))
[1] 0.2636719
> choose(5,3)*p^3*(q^(5-3))
[1] 0.08789062
> choose(5,4)*p^4*(q^(5-4))
[1] 0.01464844
> choose(5,5)*p^5*(q^(5-5))
[1] 0.0009765625
> all.dens
[1] 0.2373046875 0.3955078125 0.2636718750 0.0878906250
[5] 0.0146484375 0.0009765625
>
> choose(5,0)*p^0*(q^(5-0)) +
+ choose(5,1)*p^1*(q^(5-1)) +
+ choose(5,2)*p^2*(q^(5-2)) +
+ choose(5,3)*p^3*(q^(5-3)) +
+ choose(5,4)*p^4*(q^(5-4)) +
+ choose(5,5)*p^5*(q^(5-5))
[1] 1
> sum(all.dens)
[1] 1
> #
> (p+q)^n
[1] 1
> # note that n = whatever, (p+q)^n = 1
>
```

Proof of Binomial Expected Value and Variance

Mathematical proof of the expected value and variance of the binomial distribution
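Before working through the proof, the two results can be checked numerically. The following is only a sketch for the n = 5, p = 1/4 example used earlier, not the proof itself; the variable names `x`, `px`, `ex` and `vx` are chosen here for illustration.

```r
# Numerical check of E[X] = np and Var[X] = npq for B(5, 1/4)
n <- 5
p <- 1/4
q <- 1 - p

x  <- 0:n                    # every possible number of successes
px <- dbinom(x, n, p)        # P(X = x) for each of them

ex <- sum(x * px)            # E[X]   = sum of x * P(X = x)
vx <- sum((x - ex)^2 * px)   # Var[X] = sum of (x - E[X])^2 * P(X = x)

all.equal(ex, n * p)         # compares with np
all.equal(vx, n * p * q)     # compares with npq
```

The same sums work for any n and p, which makes them a quick sanity check on the closed-form results.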

Poisson Distribution

$$X \sim Po(\lambda)$$

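A discrete probability distribution that describes how many times an event occurs in a unit of time or a unit of space.

Parameter (population parameter)

- the mean number of occurrences per unit of time or unit of space

- denoted by lambda (λ)

Examples

- the number of customers who visit a bank in one hour

- the number of phone calls received by an office in one hour

- the number of typos on one page of a book

- the number of times a popcorn machine breaks down in one week

Conditions

- Individual events occur randomly and independently within a given interval

- the interval can be one of time, e.g. during one week

- or one of space, e.g. every mile

- The mean number of occurrences in the interval, or the rate, is known (lambda, $\lambda$)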

$$ P(X=r) = e^{- \lambda} \dfrac{\lambda^{r}} {r!},\qquad r = 0, 1, 2, . . ., $$

For curiosity,

\begin{eqnarray*}

\sum_{r=0}^{\infty} e^{- \lambda} \dfrac{\lambda^{r}} {r!}

& = & e^{- \lambda} \sum_{r=0}^{\infty} \dfrac{\lambda^{r}} {r!} \\

& = & e^{- \lambda} \left(1 + \lambda + \dfrac{\lambda^{2}}{2!} + \dfrac{\lambda^{3}}{3!} + . . . \right) \\

& = & e^{- \lambda}e^{\lambda} \\

& = & 1

\end{eqnarray*}

For why $e^{\lambda} = \left(1 + \lambda + \dfrac{\lambda^{2}}{2!} + \dfrac{\lambda^{3}}{3!} + . . . \right)$, see the Taylor series page.

What this means is that the probabilities for r running from 0 to infinity add up to 1, i.e. the distribution as a whole has total probability 1. See “What does the Poisson distribution look like?” below.

    > e <- exp(1)
    > e
    [1] 2.718282
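The series step in the derivation can be illustrated numerically. This is a minimal sketch assuming lambda = 2 and a cut-off at r = 20 (both chosen here only for illustration): the partial sums of lambda^r / r! approach exp(lambda), so multiplying by exp(-lambda) gives a total close to 1.

```r
# Partial sums of the Taylor series for exp(lambda)
lambda <- 2            # illustrative value
r <- 0:20              # illustrative cut-off; the real series is infinite

terms   <- lambda^r / factorial(r)
partial <- cumsum(terms)

partial[c(1, 3, 6, 21)]   # partial sums after 1, 3, 6 and 21 terms
exp(lambda)               # the value the partial sums converge to

total <- exp(-lambda) * sum(terms)   # truncated version of the sum of all P(X = r)
total
```

Because the terms shrink factorially, a few dozen terms are already enough for the truncated total to agree with 1 to many decimal places.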

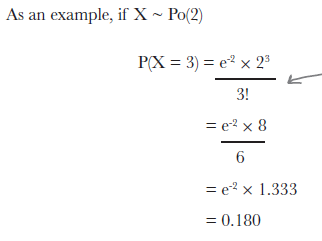

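The figure above sets up the following problem: lambda is 2, i.e. traffic accidents on the road around the crosswalk in front of Ajou University occur on average 2 times per month, and X = 3, so the question asks for the probability of exactly 3 accidents, P(X=3).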

\begin{eqnarray*}

P(X = 3) & = & e^{-2} * \frac {2^{3}}{3!} \\

& = & 0.180

\end{eqnarray*}
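The same value can be reproduced in R, either by typing in the formula or by calling `dpois`. The variable names `by.formula` and `by.dpois` are introduced here for illustration only.

```r
# P(X = 3) for X ~ Po(2), computed two ways
lambda <- 2
r <- 3

by.formula <- exp(-lambda) * lambda^r / factorial(r)   # e^{-lambda} * lambda^r / r!
by.dpois   <- dpois(r, lambda)                         # built-in Poisson probability

round(by.formula, 3)
all.equal(by.formula, by.dpois)
```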

What does the Poisson distribution look like?

\begin{eqnarray*} P(X=r) = e^{- \lambda} \dfrac{\lambda^{r}} {r!},\qquad r = 0, 1, 2, . . ., \end{eqnarray*}

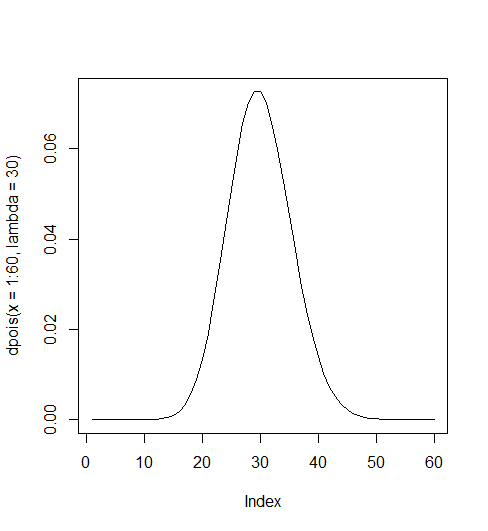

Number of customers visiting the Mapo branch of Shinhan Bank per hour: lambda = 30

    > dpois(x=1:60, lambda=30)
     [1] 2.807287e-12 4.210930e-11 4.210930e-10 3.158198e-09 1.894919e-08
     [6] 9.474593e-08 4.060540e-07 1.522702e-06 5.075675e-06 1.522702e-05
    [11] 4.152825e-05 1.038206e-04 2.395861e-04 5.133987e-04 1.026797e-03
    [16] 1.925245e-03 3.397491e-03 5.662486e-03 8.940767e-03 1.341115e-02
    [21] 1.915879e-02 2.612562e-02 3.407689e-02 4.259611e-02 5.111534e-02
    [26] 5.897924e-02 6.553248e-02 7.021338e-02 7.263453e-02 7.263453e-02
    [31] 7.029148e-02 6.589826e-02 5.990751e-02 5.285957e-02 4.530820e-02
    [36] 3.775683e-02 3.061365e-02 2.416867e-02 1.859128e-02 1.394346e-02
    [41] 1.020253e-02 7.287524e-03 5.084319e-03 3.466581e-03 2.311054e-03
    [46] 1.507209e-03 9.620485e-04 6.012803e-04 3.681308e-04 2.208785e-04
    [51] 1.299285e-04 7.495876e-05 4.242949e-05 2.357194e-05 1.285742e-05
    [56] 6.887904e-06 3.625212e-06 1.875110e-06 9.534457e-07 4.767229e-07
    > plot(dpois(x=1:60, lambda=30), type = "l")

Recall the result mentioned above:

\begin{eqnarray*} \sum_{r=0}^{\infty} e^{- \lambda} \dfrac{\lambda^{r}} {r!} & = & e^{- \lambda} \sum_{r=0}^{\infty} \dfrac{\lambda^{r}} {r!} \\ & = & e^{- \lambda} \left(1 + \lambda + \dfrac{\lambda^{2}}{2!} + \dfrac{\lambda^{3}}{3!} + . . . \right) \\ & = & e^{- \lambda}e^{\lambda} \\ & = & 1 \end{eqnarray*}

The value 1 here means that the probabilities under the whole curve in the graph below add up to 1. The code above only covered 1 through 60, but extending the range from 0 to infinity gives the complete distribution, and that total is 1 (a call such as dpois(x=0:1000, lambda=30) gets there for all practical purposes).
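A quick way to see this is to sum the probabilities over different ranges. The sketch below assumes lambda = 30 as in the bank example; the variable names are illustrative. Note that the range 1:60 leaves out both r = 0 and the tail beyond 60, so its sum falls slightly short of 1.

```r
# How much probability each range of r covers when lambda = 30
lambda <- 30

p0       <- dpois(0, lambda)             # the r = 0 term that 1:60 leaves out
s.1.60   <- sum(dpois(1:60, lambda))     # the range used in the plot
s.0.60   <- sum(dpois(0:60, lambda))     # same range with r = 0 included
s.0.1000 <- sum(dpois(0:1000, lambda))   # practically the whole distribution

c(p0, s.1.60, s.0.60, s.0.1000)
```

`ppois(60, lambda)` returns the same quantity as `s.0.60` directly, since it is the cumulative probability P(X <= 60).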

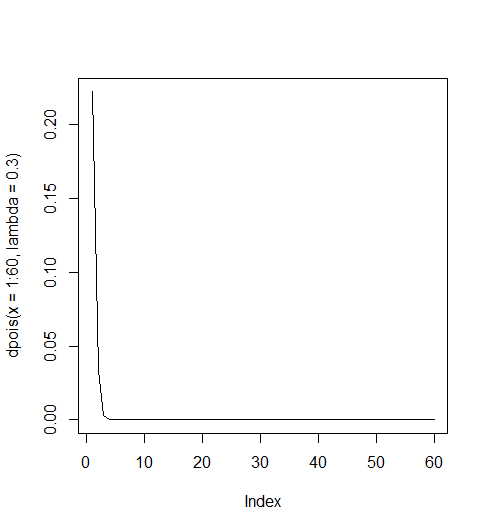

The larger lambda is, the closer the distribution comes to a symmetric bell shape 1); the smaller it is, the more the distribution is skewed to the right, i.e. positively skewed 2).

    > dpois(x=1:60, lambda=.3)
     [1]  2.222455e-01  3.333682e-02  3.333682e-03  2.500261e-04  1.500157e-05
     [6]  7.500784e-07  3.214622e-08  1.205483e-09  4.018277e-11  1.205483e-12
    [11]  3.287682e-14  8.219204e-16  1.896739e-17  4.064441e-19  8.128883e-21
    [16]  1.524166e-22  2.689704e-24  4.482840e-26  7.078168e-28  1.061725e-29
    [21]  1.516750e-31  2.068296e-33  2.697777e-35  3.372222e-37  4.046666e-39
    [26]  4.669230e-41  5.188033e-43  5.558607e-45  5.750283e-47  5.750283e-49
    [31]  5.564790e-51  5.216991e-53  4.742719e-55  4.184752e-57  3.586930e-59
    [36]  2.989108e-61  2.423601e-63  1.913370e-65  1.471823e-67  1.103867e-69
    [41]  8.077076e-72  5.769340e-74  4.025121e-76  2.744401e-78  1.829600e-80
    [46]  1.193218e-82  7.616283e-85  4.760177e-87  2.914394e-89  1.748636e-91
    [51]  1.028610e-93  5.934286e-96  3.359030e-98 1.866128e-100 1.017888e-102
    [56] 5.452971e-105 2.869985e-107 1.484475e-109 7.548177e-112 3.774089e-114
    > plot(dpois(x=1:60, lambda=.3), type = "l")

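In general, when lambda is less than 1 the graph looks like that of a geometric distribution, and when lambda is greater than 1 it moves toward the shape of a normal distribution as lambda grows.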
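The change in shape can be put into numbers by computing the skewness directly from the probabilities. This is a rough sketch: `pois.skew` is a helper written here for illustration (not an R built-in), and the upper limit of 1000 is an arbitrary truncation. The comparison value 1/sqrt(lambda) is the standard skewness of a Poisson distribution, a fact brought in from outside this page.

```r
# Skewness of Po(lambda), computed from the probability mass function
pois.skew <- function(lambda, upper = 1000) {
  x  <- 0:upper                       # truncated support
  px <- dpois(x, lambda)
  m  <- sum(x * px)                   # mean
  s  <- sqrt(sum((x - m)^2 * px))     # standard deviation
  sum(((x - m) / s)^3 * px)           # third standardized moment
}

sk.small <- pois.skew(0.3)   # small lambda: strongly right-skewed
sk.large <- pois.skew(30)    # large lambda: close to symmetric

c(sk.small, 1 / sqrt(0.3))
c(sk.large, 1 / sqrt(30))
```

A skewness near 0 corresponds to the bell shape seen for lambda = 30, while the larger positive value for lambda = 0.3 matches the long right tail.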

Exercise

Your job is to play like you’re the popcorn machine and say what the probability is of you malfunctioning a particular number of times next week. Remember, the mean number of times you break down in a week is 3.4.

- What’s the probability of the machine not malfunctioning next week?

- What’s the probability of the machine malfunctioning three times next week?

- What’s the expectation and variance of the machine malfunctions?

1. What’s the probability of the machine not malfunctioning next week?

$\lambda = 3.4$

$\text{malfunctioning} = 0$

\begin{eqnarray*} P(X=0) & = & e^{-3.4} * \frac{3.4^{0}} {0!} \\ & = & e^{-3.4} \\ & = & 0.03337327 \end{eqnarray*}

    # calculation in R (with e <- exp(1) as defined earlier)
    > e^(-3.4)
    [1] 0.03337327
    > # or
    > dpois(0, 3.4)
    [1] 0.03337327

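For a variable that follows a Poisson distribution, the probability that nothing happens is $e^{-\lambda}$. For example, if calls to the 119 emergency line arrive at an average rate of 5 per hour, what is the probability that there was not a single call during the past hour?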

\begin{eqnarray*}

P(X=0) & = & e^{-5} * \frac{5^{0}} {0!} \\

& = & e^{-5} \\

& = & 0.006737947

\end{eqnarray*}

    > lambda <- 5
    > e <- exp(1)
    > px.0 <- e^(-lambda)
    > px.0
    [1] 0.006737947
    > # or
    > dpois(0,5)
    [1] 0.006737947

2. What’s the probability of the machine malfunctioning three times next week?

    l <- 3.4
    x <- 3
    e <- exp(1)
    ans <- ((e^(-l))*l^x)/factorial(x)

    > l <- 3.4
    > x <- 3
    > e <- exp(1)
    > ans <- ((e^(-l))*l^x)/factorial(x)
    > ans
    [1] 0.2186172

Instead of the calculation above, it is usual to use the following function.

    > dpois(x=3, lambda=3.4)
    [1] 0.2186172

Likewise, the case of at most 3 breakdowns includes 0, 1, 2 and 3, so

    > sum(dpois(c(0:3), lambda=3.4))
    [1] 0.5583571
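Summing `dpois` over 0 to 3 gives the cumulative probability P(X <= 3), which R also provides directly as `ppois`. A small sketch with illustrative variable names:

```r
# P(X <= 3) for X ~ Po(3.4): sum of probabilities versus the cumulative function
lambda <- 3.4

by.sum <- sum(dpois(0:3, lambda))   # P(X=0) + P(X=1) + P(X=2) + P(X=3)
by.cdf <- ppois(3, lambda)          # cumulative probability P(X <= 3)

more.than.3 <- 1 - by.cdf           # complement: P(X > 3)

c(by.sum, by.cdf, more.than.3)
```

`ppois(3, lambda, lower.tail = FALSE)` returns the complement P(X > 3) without the explicit subtraction.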

3. What’s the expectation and variance of the machine malfunctions?

\begin{eqnarray*}
E(X) & = & \lambda = 3.4 \\
Var(X) & = & \lambda = 3.4
\end{eqnarray*}
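That both the expectation and the variance equal lambda can be checked by summing over the distribution. This is a minimal sketch; the cut-off at 200 is an arbitrary truncation of the infinite support, chosen here because the probabilities beyond it are negligible for lambda = 3.4.

```r
# Numerical check of E(X) = Var(X) = lambda for X ~ Po(3.4)
lambda <- 3.4
x  <- 0:200                  # truncated support (illustrative cut-off)
px <- dpois(x, lambda)

ex <- sum(x * px)            # expectation
vx <- sum((x - ex)^2 * px)   # variance

c(ex, vx)
```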

Two Poisson distribution cases

\begin{eqnarray*} X \sim Po(3.4) \\ Y \sim Po(2.3) \end{eqnarray*}

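Given the above, and assuming the two machines break down independently of each other, the probability that neither the popcorn machine nor the coffee machine breaks down during a week is the following.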

\begin{eqnarray*}

P(X + Y = 0)

\end{eqnarray*}

Here the distribution of X + Y is as follows.

\begin{eqnarray*}
X + Y \sim Po(\lambda_{x} + \lambda_{y})
\end{eqnarray*}

The sum of the lambdas is 5.7 (see below), so the problem comes down to finding P(X + Y = 0) when lambda is 5.7, which is 0.003.

\begin{eqnarray*}

\lambda_{X} + \lambda_{Y} & = & 3.4 + 2.3 \\

& = & 5.7 \\

\end{eqnarray*}

$$X + Y \sim Po(5.7)$$

\begin{eqnarray*} P(X + Y = 0) & = & \frac {e^{- \lambda} \lambda^{r}} {r!} \\ & = & \frac {e^{-5.7} 5.7^{0}}{0!} \\ & = & e^{-5.7} \\ & = & 0.003 \end{eqnarray*}
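The additivity of lambda can be checked numerically. The sketch below assumes X and Y are independent, which the result relies on; the variable names and the extra check at k = 4 are illustrative additions.

```r
# X ~ Po(3.4), Y ~ Po(2.3), assumed independent
lambda.x <- 3.4
lambda.y <- 2.3

# P(X + Y = 0) from the combined distribution Po(5.7)
p.sum <- dpois(0, lambda.x + lambda.y)

# the same event written as "X = 0 and Y = 0"
p.prod <- dpois(0, lambda.x) * dpois(0, lambda.y)

# a further check for k = 4: add up every split x + y = 4
k <- 4
p.conv <- sum(dpois(0:k, lambda.x) * dpois(k:0, lambda.y))

round(p.sum, 3)
all.equal(p.sum, p.prod)
all.equal(p.conv, dpois(k, lambda.x + lambda.y))
```

The k = 4 line adds up P(X = x) P(Y = 4 - x) over x = 0, ..., 4, which is what the distribution of a sum of independent variables looks like in general.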

Broken Cookies case

The Case of the Broken Cookies

Kate works at the Statsville cookie factory, and her job is to make sure that boxes of cookies meet the factory’s strict rules on quality control. Kate knows that the probability that a cookie is broken is 0.1, and her boss has asked her to find the probability that there will be 15 broken cookies in a box of 100 cookies. “It’s easy,” he says. “Just use the binomial distribution where n is 100, and p is 0.1.”

Kate picks up her calculator, but when she tries to calculate 100!, her calculator displays an error because the number is too big. “Well,” says her boss, “you’ll just have to calculate it manually. But I’m going home now, so have a nice night.”

Kate stares at her calculator, wondering what to do. Then she smiles. “Maybe I can leave early tonight, after all.”

Within a minute, Kate’s calculated the probability. She’s managed to find the probability and has managed to avoid calculating 100! altogether. She picks up her coat and walks out the door.

How did Kate find the probability so quickly, and avoid the error on her calculator?

If we first treat the problem above as a binomial distribution problem, the answer can be written as

\begin{eqnarray*}
P(r=15) & = & _{100}C_{15} * 0.1^{15} * 0.9^{85}
\end{eqnarray*}

\begin{eqnarray} X & \sim & B(n, p) \\ X & \sim & Po(\lambda) \end{eqnarray}

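To use the Poisson distribution instead, B(n, p) and Po(lambda) have to be similar. Looking at the expected value and variance of the two distributions,

- for B(n, p), E(X) = np,

- for Po(lambda), E(X) = lambda,

- for B(n, p), Var(X) = npq, and

- for Po(lambda), Var(X) = lambda.

So for the two to behave alike, npq and np must be equal, which would require q to be 1. In practice q cannot be exactly 1, but if q is close to 1 and n is sufficiently large, the two distributions can be treated as similar. Therefore,

- if n is sufficiently large and

- p is small (q is large),

- then $X \sim B(n, p)$ and $Y \sim Po(np)$ will be similar.

- As a rule of thumb, this applies when n > 50 and p is less than 0.1.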
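How close the two distributions are can be measured by comparing their probabilities value by value. In this sketch, `max.diff` is a helper defined here for illustration (not an R built-in), and the three (n, p) pairs are arbitrary test cases rather than values from the text.

```r
# Largest gap between B(n, p) and Po(np) over x = 0, ..., n
max.diff <- function(n, p) {
  x <- 0:n
  max(abs(dbinom(x, n, p) - dpois(x, lambda = n * p)))
}

d1 <- max.diff(100, 0.1)    # large n, p at the edge of the rule of thumb
d2 <- max.diff(100, 0.01)   # large n, very small p
d3 <- max.diff(10, 0.5)     # small n, large p: the approximation is poor

c(d1, d2, d3)
```

The gap is smallest when p is very small and largest when n is small and p is large, in line with the conditions listed above.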

    > dbinom(x=15, 100, 0.1)
    [1] 0.03268244
    > choose(100, 15)
    [1] 2.533385e+17
    > a <- choose(100, 15)
    > b <- .1^15
    > c <- .9^85
    > a*b*c
    [1] 0.03268244

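The above is the exact answer, but with a limited calculator we can reason as follows.

Since $X \sim B(n, p)$ with B(100, 0.1),

n*p = 10 = lambda

So under the Poisson distribution the problem becomes finding P(r=15) when lambda = 10: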

\begin{eqnarray*} P(r = 15) & = & e^{-10} * \frac {10^{15}}{15!} \\ & = & 0.0347180 \end{eqnarray*}

    > dpois(x=15, lambda=10)
    [1] 0.03471807
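As a side note, the exact binomial value can also be reached without ever forming a huge number such as 100!, by working on the log scale. This is a sketch of an alternative route, not the method used in the story; `lchoose` is the base R function returning the logarithm of the binomial coefficient, and the variable names are illustrative.

```r
# Exact binomial probability on the log scale, next to the Poisson approximation
n <- 100
r <- 15
p <- 0.1

# log of choose(n, r) * p^r * (1 - p)^(n - r)
log.prob <- lchoose(n, r) + r * log(p) + (n - r) * log(1 - p)
exact    <- exp(log.prob)

pois.approx <- dpois(r, lambda = n * p)   # Poisson approximation with lambda = np

c(exact, pois.approx)
```

R itself can evaluate factorial(100) as a floating-point number, so the overflow in the story is a limitation of the calculator rather than of R.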

A student needs to take an exam, but hasn’t done any revision for it. He needs to guess the answer to each question, and the probability of getting a question right is 0.05. There are 50 questions on the exam paper. What’s the probability he’ll get 5 questions right? Use the Poisson approximation to the binomial distribution to find out.

If we calculate with the binomial distribution,

    > dbinom(x=5, 50, 0.05)
    [1] 0.06584064

But the question asks us to use the Poisson distribution.

We want $P(X=5)$ when $ X \sim B(50, 0.05) $. The expected value is $ E(X) = np = 50 * .05 = 2.5 $, so the problem is the same as finding $P(X=5)$ when

\begin{eqnarray*} X & \sim & Po(\lambda) \\ X & \sim & Po(2.5) \end{eqnarray*}

    > dpois(x=5, lambda = 2.5)
    [1] 0.06680094

Following the formula,

\begin{eqnarray*}

P(X = 5) & = & \frac {e^{-2.5} * 2.5^{5}}{5!} \\

& = & 0.067

\end{eqnarray*}

    > n <- 50
    > p <- .05
    > q <- 1-p
    > x <- 5
    > np <- n*p # Poisson distribution
    > e <- exp(1)
    > lambda <- np
    > lambda
    [1] 2.5
    > a <- e^(-lambda)
    > b <- lambda^x
    > c <- factorial(x)
    > a*b/c
    [1] 0.06680094

Exercise

Here are some scenarios. Your job is to say which distribution each of them follows, say what the expectation and variance are, and find any required probabilities.

1. A man is bowling. The probability of him knocking all the pins over is 0.3. If he has 10 shots, what’s the probability he’ll knock all the pins over less than three times?

Using the binomial distribution,

\begin{eqnarray*}

X & \sim & B(n, p) \\

X & \sim & B(10, 0.3)

\end{eqnarray*}

\begin{eqnarray*} E(X) & = & np \\ & = & 10 * 0.3 \\ & = & 3 \end{eqnarray*}

\begin{eqnarray*} Var(X) & = & npq \\ & = & 10 * 0.3 * 0.7 \\ & = & 2.1 \end{eqnarray*}

In R, use pbinom.

    > pbinom(q=2, 10, 0.3)
    [1] 0.3827828

To calculate by hand,

find $P(X=0), P(X=1), P(X=2)$ and add them all up to get P(X < 3).

\begin{eqnarray*} P(X = 0) & = & {10 \choose 0} * 0.3^0 * 0.7^{10} \\ & = & 1 * 1 * 0.028 \\ & = & 0.028 \end{eqnarray*}

\begin{eqnarray*} P(X = 1) & = & {10 \choose 1} *0.3^1 * 0.7^9 \\ & = & 10 * 0.3 * 0.04035 \\ & = & 0.121 \end{eqnarray*}

\begin{eqnarray*} P(X = 2) & = & {10 \choose 2} * 0.3^2 * 0.7^8 \\ & = & 45 * 0.09 * 0.0576 \\ & = & 0.233 \end{eqnarray*}

\begin{eqnarray*} P(X<3) & = & P(X=0) + P(X=1) + P(X=2) \\ & = & 0.028 + 0.121 + 0.233 \\ & = & 0.382 \end{eqnarray*}
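The hand calculation can be cross-checked term by term in R. This is a small sketch with illustrative variable names; the hand-computed total of 0.382 differs from the unrounded value in the last digit only because each term was rounded to three decimals before adding.

```r
# Bowling example: X ~ B(10, 0.3), P(X < 3)
n <- 10
p <- 0.3

terms <- dbinom(0:2, n, p)   # P(X=0), P(X=1), P(X=2)
round(terms, 3)              # the three values used in the hand calculation

total <- sum(terms)          # unrounded P(X < 3)
total
pbinom(2, n, p)              # same probability from the cumulative function
```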

2. On average, 1 bus stops at a certain point every 15 minutes. What’s the probability that no buses will turn up in a single 15 minute interval?

This is a Poisson distribution problem, so the expected value and the variance are both equal to lambda, which is 1 (on average one bus arrives every 15 minutes).

\begin{eqnarray*} P(X=0) & = & \frac {e^{-1}{1^0}}{0!} \\ & = & \frac {e^{-1} * 1}{1} \\ & = & .368 \end{eqnarray*}
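In R the same probability is a single call. The sketch below also computes the complementary probability of at least one bus, which is an extra not asked for in the exercise; the variable names are illustrative.

```r
# Bus example: X ~ Po(1) per 15-minute interval
lambda <- 1

p.none <- dpois(0, lambda)   # P(X = 0), equal to exp(-1)
p.some <- 1 - p.none         # P(X >= 1), complement of "no bus"

round(c(p.none, p.some), 3)
```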

3. 20% of cereal packets contain a free toy. What’s the probability you’ll need to open fewer than 4 cereal packets before finding your first toy?

This is a geometric distribution problem, so

$$X \sim Geo(.2)$$

We need $P(X \le 3)$, so

\begin{eqnarray*} P(X \le 3) & = & 1 - q^r \\ & = & 1 - 0.8^{3} \\ & = & 1 - 0.512 \\ & = & 0.488 \end{eqnarray*}

The expected value and the variance are $1/p$ and $q/p^2$ respectively, i.e. $5$ and $20$.
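These values can be checked in R, with one caveat about parameterization: R's `pgeom` and `dgeom` count the number of failures before the first success, whereas X here counts trials up to and including the first success. So P(X <= 3) corresponds to at most 2 failures, i.e. `pgeom(2, p)`. The variable names below are illustrative.

```r
# Cereal example: X ~ Geo(0.2), X = number of packets opened until the first toy
p <- 0.2
q <- 1 - p

by.formula <- 1 - q^3                # P(X <= 3) = 1 - q^r with r = 3
by.sum     <- sum(q^(0:2) * p)       # P(X=1) + P(X=2) + P(X=3)
by.pgeom   <- pgeom(2, p)            # at most 2 failures before the first success

ex <- 1 / p                          # expected value
vx <- q / p^2                        # variance

c(by.formula, by.sum, by.pgeom, ex, vx)
```

Forgetting the shift by one (calling `pgeom(3, p)` instead) would return P(X <= 4), which is a common slip when moving between the textbook definition and R.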